Most AI products today are built for convenience: summarize this, rewrite that, suggest a few options, answer a question. These tools live in low-risk environments where errors are annoying, not dangerous. In medicine, finance, and research, the stakes are fundamentally different.

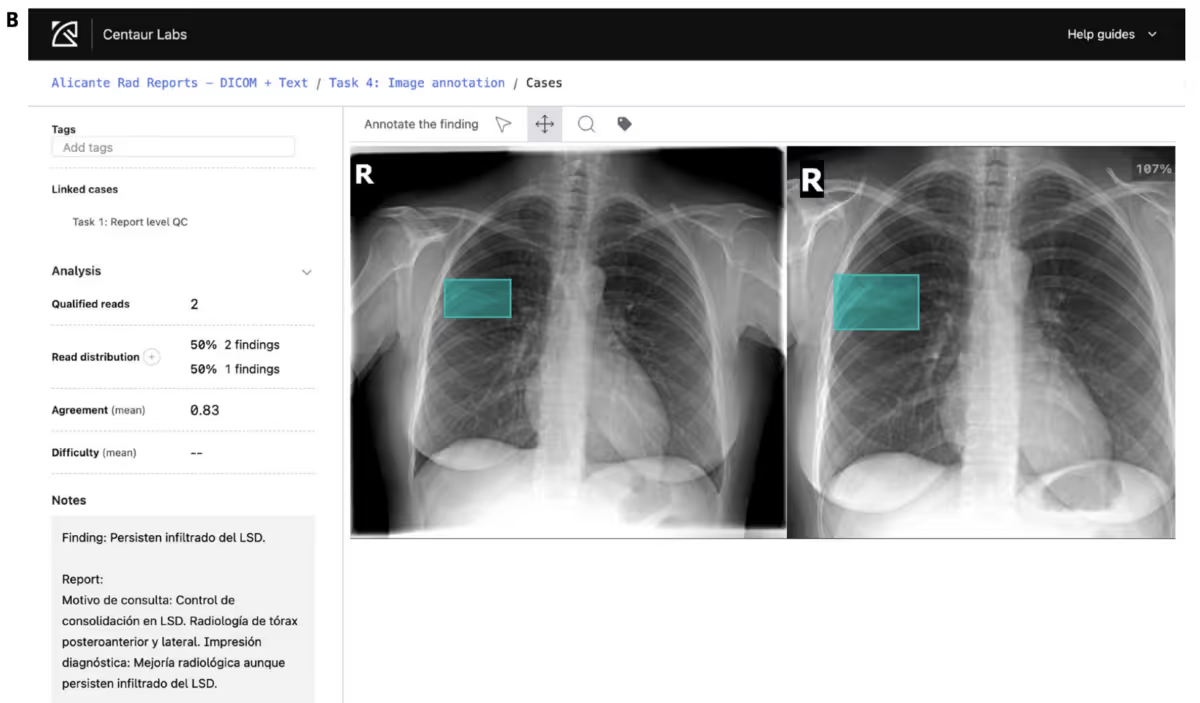

Today, AI in medicine is already assisting clinicians with tasks like reading radiology scans, summarizing clinical documentation, flagging anomalies in vital signs, and predicting patient risk. These systems generate preliminary interpretations, but clinicians remain responsible for all final decisions. This reflects a broader pattern: high-stakes AI succeeds only when it supports expert judgment, not when it attempts to replace it.

These deployments aren’t general chatbots. They are retrieval-augmented systems, anomaly detectors, predictive models, or workflow-specific agents, and they handle sensitive data under a heavy regulatory burden, in workflows where a wrong answer can’t be brushed aside.

This shift mirrors a broader industry trend: teams are moving away from one all-purpose chatbot and toward small, specialized agents with clearly defined roles. It’s the same pattern we explored in our recent article, “From One Big Chatbot to Many Small Agents: What This Means for UX.” That transition becomes even more important in high-stakes environments, where ambiguity simply isn’t acceptable.

As these more focused AI systems enter regulated, safety-critical, or deeply technical domains, UX stops being about surface polish. It becomes about structure, constraints, and domain-aware design. These elements determine whether an AI system behaves predictably and supports, rather than replaces, expert judgment.

Why High-Stakes AI Requires a Different UX Mindset

One of the biggest challenges highlighted by medical researchers is biased training data. If models are trained on narrow or unrepresentative datasets, they risk reinforcing existing health disparities. UX plays a critical role here: interfaces must reveal uncertainty, highlight assumptions, and encourage clinicians to double-check outputs for populations that may be underrepresented in the data.

Beyond data quality concerns, high-stakes AI also operates under strict compliance and safety requirements that consumer tools never encounter:

- Regulation and regulators (HIPAA, CLIA, FDA, SEC, FINRA)

- Auditability requirements (logs, traceability, reproducibility)

- Safety standards (medical device UX rules, industrial safety protocols)

- Human oversight obligations (AI must support, not replace, expertise)

Even leading AI research organizations acknowledge the risk of applying generalized models to specialized domains without guardrails. Scientific and medical publications repeatedly emphasize that large models require tightly controlled workflows and domain context to avoid errors, misinterpretations, or fabricated rationales.

This shifts UX from “make it easy” to “make it impossible to misunderstand.”

In high-stakes environments, UX must constrain AI behavior, reveal uncertainty clearly, explain how outputs were generated, support verification workflows, and prevent automation from outrunning human judgment. These requirements are design problems first, model problems second.

General-Purpose UI Patterns Don’t Work Here

Most AI interfaces today follow the same formula: a chat box, a long LLM output, and a few suggested prompts. This pattern works for flexible, exploratory tools, but it fails for science, finance, and medicine because those fields depend on precision, sequence, and verification.

A clinician cannot rely on a paragraph-long speculative answer. A scientist cannot accept an insight without reproducibility. A compliance team cannot adopt a tool that cannot show its work. In these environments, the UI must shape the AI’s role, not the other way around.

A few examples:

In clinical tools:

- AI must surface citations, evidence links, and domain constraints.

- UI must separate facts from model-generated inference.

- Uncertainty must be communicated visually and explicitly.

In scientific research tools:

- AI must describe assumptions, data limitations, and alternatives.

- UI must support step-by-step reasoning, not just answers.

- Users must be able to drill into source calculations.

In industrial and operations tools:

- Agents must not execute actions without confirmation.

- Interfaces must show potential risks or downstream effects.

- Logs need to be visible, filterable, and auditable.

These are not “chat experiences.” They are structured workflows where AI acts as a supporting analyst.

Medical AI experts consistently stress the importance of explainability: clinicians must be able to see how and why an AI arrived at a recommendation. In high-stakes environments, opacity is dangerous. UX must therefore support layered explanations: what data was used, what reasoning steps were followed, and where uncertainty or disagreement exists.

Why Domain Expertise Matters More Than Dribbble-Ready UI

A beautifully polished interface does not protect a user from a wrong dosage, a flawed financial insight, or an inaccurate research summary.

High-stakes AI requires UX teams to understand how real professionals work:

- What steps exist in the workflow

- Where decisions get made

- What data is required at each stage

- What failure looks like

- What verification looks like

- What “human oversight” actually means in context

In these products, workflow fidelity is more important than visual novelty.

For example:

- A clinical decision-support agent should never reorder steps in a workflow for “efficiency.”

- A financial analysis agent cannot hide uncertainty behind confident phrasing.

- A research-triage agent must not collapse complex findings into oversimplified summaries.

Domain knowledge informs constraints, and constraints are what keep AI safe.

This is why organizations in medtech, fintech, and research increasingly seek UX teams that can help structure decisions, not just interfaces.

The Harvard report reinforces this point: clinicians remain the final decision-makers. AI tools cannot reorder diagnostic steps, bypass medical reasoning, or present insights without sufficient clinical context. UX must reflect real clinical workflows, including the way doctors confirm diagnoses, compare differential possibilities, or escalate a case to a specialist.

Design Principles for High-Stakes AI UX

The industry is beginning to coalesce around a few principles for designing AI in regulated or sensitive environments. These are grounded in published guidelines from healthcare UX research, safety-critical software design, and human-AI interaction frameworks.

1. Constrain the system

AI should not be free to improvise. Give it narrow roles, limited actions, and explicit boundaries.
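As a minimal sketch of what “explicit boundaries” can mean in code, the example below keeps the list of permitted actions in application code rather than in the prompt, so a request outside the role is refused no matter what the model generates. `AgentRole`, `ActionNotAllowed`, and the action names are hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass, field


class ActionNotAllowed(Exception):
    """Raised when an agent requests an action outside its role."""


@dataclass(frozen=True)
class AgentRole:
    # Hypothetical role definition: a name plus an explicit allowlist of actions.
    name: str
    allowed_actions: frozenset[str] = field(default_factory=frozenset)

    def check(self, action: str) -> str:
        # The boundary is enforced by application code, not left to the model.
        if action not in self.allowed_actions:
            raise ActionNotAllowed(f"{self.name!r} may not perform {action!r}")
        return action


# Illustrative role: a lab-summary agent may read and summarize, nothing else.
lab_summarizer = AgentRole(
    name="lab_summarizer",
    allowed_actions=frozenset({"read_lab_results", "draft_summary"}),
)

lab_summarizer.check("draft_summary")  # permitted
try:
    lab_summarizer.check("place_order")  # outside the role, so refused
except ActionNotAllowed as err:
    print(err)  # 'lab_summarizer' may not perform 'place_order'
```

Keeping the allowlist outside the model means the boundary can be reviewed, versioned, and tested like any other code.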

2. Separate fact, inference, and uncertainty

Clinicians, scientists, and analysts must see:

- What is retrieved

- What is generated

- What is uncertain

- Where evidence comes from
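One way to keep these categories from blending together is to tag every statement with its provenance before it reaches the interface. The sketch below assumes a hypothetical `Statement` type, a 0 to 1 confidence score reported by the pipeline, and an arbitrary 0.7 threshold for flagging uncertainty. A real system would need a calibrated measure and a visual treatment rather than plain-text labels.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Provenance(Enum):
    RETRIEVED = "retrieved"  # looked up or quoted from a source record
    GENERATED = "generated"  # produced by the model (inference)


@dataclass(frozen=True)
class Statement:
    text: str
    provenance: Provenance
    source: Optional[str] = None        # where the evidence comes from, if any
    confidence: Optional[float] = None  # assumed 0..1 score, if the pipeline has one

    def __post_init__(self) -> None:
        # A "retrieved" claim with no source cannot be checked, so reject it.
        if self.provenance is Provenance.RETRIEVED and not self.source:
            raise ValueError("retrieved statements must cite a source")


def render(stmt: Statement, uncertain_below: float = 0.7) -> str:
    """Label each statement so fact, inference, and uncertainty stay distinct."""
    label = "FACT" if stmt.provenance is Provenance.RETRIEVED else "INFERENCE"
    parts = [f"[{label}] {stmt.text}"]
    if stmt.source:
        parts.append(f"(source: {stmt.source})")
    if stmt.confidence is not None and stmt.confidence < uncertain_below:
        parts.append(f"[UNCERTAIN: confidence {stmt.confidence:.2f}]")
    return " ".join(parts)


# Hypothetical record ID and statements, for illustration only.
fact = Statement("Serum potassium is above the reference range",
                 Provenance.RETRIEVED, source="lab-record-123")
guess = Statement("Pattern may reflect a sample-handling artifact",
                  Provenance.GENERATED, confidence=0.55)
print(render(fact))
print(render(guess))
```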

3. Force verification, don’t assume it

Build verification steps directly into the interface. Make it easy to cross-check sources.

4. Support traceability and medical-grade auditability

Healthcare organizations require traceable, auditable reasoning steps. Users must be able to review not just the output, but the data sources, statistical assumptions, and risk factors that shaped it. This mirrors regulatory expectations for any system influencing diagnosis or patient care.
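A common way to make an audit trail tamper-evident is hash chaining: each entry stores the hash of the entry before it. The sketch below is a minimal illustration of that technique, not a compliance-ready implementation; the field names (`actor`, `sources`, `model_version`) are hypothetical examples of what a reviewer might need to reconstruct how an output was produced.

```python
import hashlib
import json
from dataclasses import dataclass, field
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: dict) -> str:
    # Canonical JSON (sorted keys) so identical content always hashes identically.
    payload = json.dumps(body, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


@dataclass
class AuditLog:
    """Append-only, hash-chained log of what each AI step saw and produced."""
    entries: list = field(default_factory=list)

    def append(self, actor: str, event: str, sources: list, model_version: str) -> dict:
        body = {
            "seq": len(self.entries),
            "time": datetime.now(timezone.utc).isoformat(),
            "actor": actor,                  # user or agent responsible for the step
            "event": event,                  # what happened, in plain language
            "sources": sources,              # data the output was based on
            "model_version": model_version,  # needed to reproduce the output later
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS,
        }
        entry = {**body, "hash": _digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash and check each link to the previous entry."""
        prev = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev or _digest(body) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True


log = AuditLog()
log.append("summary_agent", "drafted lab summary", ["lab-record-123"], "model-v1")
log.append("reviewer_a", "approved summary", [], "model-v1")
print(log.verify())
```

Chaining detects edits to, or removal of, earlier entries. It does not detect truncation of the newest entries unless the latest hash is also stored somewhere independent.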

5. Avoid passive automation

AI must not take action silently. High-stakes automation always requires confirmation.
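The confirmation requirement can be modeled as a small state machine in which an agent may only propose an action, and the side effect runs only after a named person confirms it. `ProposedAction`, its states, and the reviewer ID are hypothetical; the point of the sketch is that `execute()` refuses to run from any state other than confirmed.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable, Optional


class Status(Enum):
    PROPOSED = "proposed"
    CONFIRMED = "confirmed"
    REJECTED = "rejected"
    EXECUTED = "executed"


@dataclass
class ProposedAction:
    """An action an agent suggests but cannot carry out on its own."""
    description: str
    downstream_effects: list  # shown to the reviewer before they decide
    run: Callable[[], None]   # the real side effect; only called by execute()
    status: Status = Status.PROPOSED
    reviewer: Optional[str] = None

    def _decide(self, reviewer: str, outcome: Status) -> None:
        if self.status is not Status.PROPOSED:
            raise RuntimeError(f"action already {self.status.value}")
        self.status = outcome
        self.reviewer = reviewer  # record who made the call

    def confirm(self, reviewer: str) -> None:
        self._decide(reviewer, Status.CONFIRMED)

    def reject(self, reviewer: str) -> None:
        self._decide(reviewer, Status.REJECTED)

    def execute(self) -> None:
        # No silent automation: nothing runs without a recorded human confirmation.
        if self.status is not Status.CONFIRMED:
            raise PermissionError("explicit human confirmation required")
        self.run()
        self.status = Status.EXECUTED


sent = []
action = ProposedAction(
    description="Send flagged result to on-call clinician",
    downstream_effects=["pages the on-call clinician"],
    run=lambda: sent.append("page"),
)
try:
    action.execute()  # blocked: not yet confirmed
except PermissionError as err:
    print(err)
action.confirm("reviewer_a")
action.execute()
```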

6. Make failure safe and visible

When the model is wrong, the interface should make the error visible and help users recover, not hide it.

All six of these principles are UX patterns, not model patterns.

How AX Strengthens High-Stakes Design

High-stakes environments benefit from multiple specialized agents instead of one general one. Each agent can:

- address a specific step in the workflow

- operate with clear boundaries

- be tested and audited independently

- communicate its role with less ambiguity

In clinical and research settings, this often means assigning narrow responsibilities to specific agents: one agent may summarize lab results, another may highlight guideline pathways, and another may flag risk patterns based on vitals or history. Clear boundaries make each agent more testable, predictable, and auditable.

This mirrors Composite’s work on agent-readable design, or AX. When the architecture is clean and the components are consistent, agents behave more predictably. Read our article “Designing for AI Agents: How to Structure Content for Machine Interpretation” for more on AX.

Why Structured Architecture Matters (and Why Webflow Is Useful Here)

AI agents cannot operate reliably on messy or ambiguous UI.

This is especially evident in healthcare settings, where AI tools often fail not because the model is weak, but because electronic health record data is fragmented, inconsistently labeled, or partially unstructured. When the underlying architecture is unclear, clinicians cannot trust the system, and the AI cannot reason about the data safely.

These agents need:

- semantic structure

- repeatable components

- consistent labeling

- well-defined data fields

- predictable relationships between screens or pages
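As a small illustration of consistent labeling and well-defined data fields, the sketch below normalizes inconsistent lab labels to one canonical name and rejects anything it does not recognize instead of guessing. The label map and expected units are hypothetical; a production system would typically map to a standard vocabulary such as LOINC.

```python
from dataclasses import dataclass

# Hypothetical map from labels seen in source systems to one canonical name.
CANONICAL_LABELS = {
    "k": "potassium",
    "k+": "potassium",
    "potassium": "potassium",
    "na": "sodium",
    "na+": "sodium",
    "sodium": "sodium",
}

# Expected unit for each canonical field (an assumption for this sketch).
EXPECTED_UNITS = {"potassium": "mmol/L", "sodium": "mmol/L"}


@dataclass(frozen=True)
class LabValue:
    name: str  # canonical field name
    value: float
    unit: str


def normalize(label: str, value: float, unit: str) -> LabValue:
    """Map a raw label to its canonical field, or fail loudly."""
    name = CANONICAL_LABELS.get(label.strip().lower())
    if name is None:
        # Unknown label: refuse rather than let an agent guess what it means.
        raise ValueError(f"unrecognized label: {label!r}")
    if unit != EXPECTED_UNITS[name]:
        # A value in an unexpected unit is treated as an error, not silently converted.
        raise ValueError(f"{name} expected in {EXPECTED_UNITS[name]}, got {unit!r}")
    return LabValue(name=name, value=value, unit=unit)


print(normalize(" K+ ", 4.1, "mmol/L"))  # LabValue(name='potassium', value=4.1, unit='mmol/L')
```

Failing loudly on an unknown label is a deliberate choice: an agent that silently guesses what “pot.” means is exactly the kind of ambiguity this section warns against.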

Platforms like Webflow enforce many of these patterns by default: clean HTML, structured CMS fields, reusable components, and controlled semantics. That’s one reason a Webflow agency or UX design agency can build agent-ready interfaces faster and with more predictability.

In high-stakes environments, structure isn’t about aesthetics; it’s about safety.

Where High-Stakes AI Is Going Next

As tools evolve, the UX layer will determine whether AI is trusted. Current trends point toward:

- smaller, more domain-specific agents

- more explicit workflows

- tighter constraints

- explainable reasoning

- auditability and traceability built into the product

- teams that blend domain experts with UX and engineering

We’re moving into an era where AI doesn’t replace human expertise but reinforces it. That only works if the UX is designed to support real-world decision-making, not generic chat interactions.

As regulatory frameworks evolve, UX will increasingly serve as the layer that ensures AI systems behave safely by documenting reasoning, constraining automation, and supporting the level of oversight required in fields like medicine, biotech, and finance.

The teams that treat UX as a safety layer, not a cosmetic one, will build the AI systems people can actually trust.