At the end of January, a new platform went viral, and it doesn’t look like a typical tech breakthrough. It doesn’t feature a slick consumer UI, strategic onboarding funnels, or even a human-first purpose.

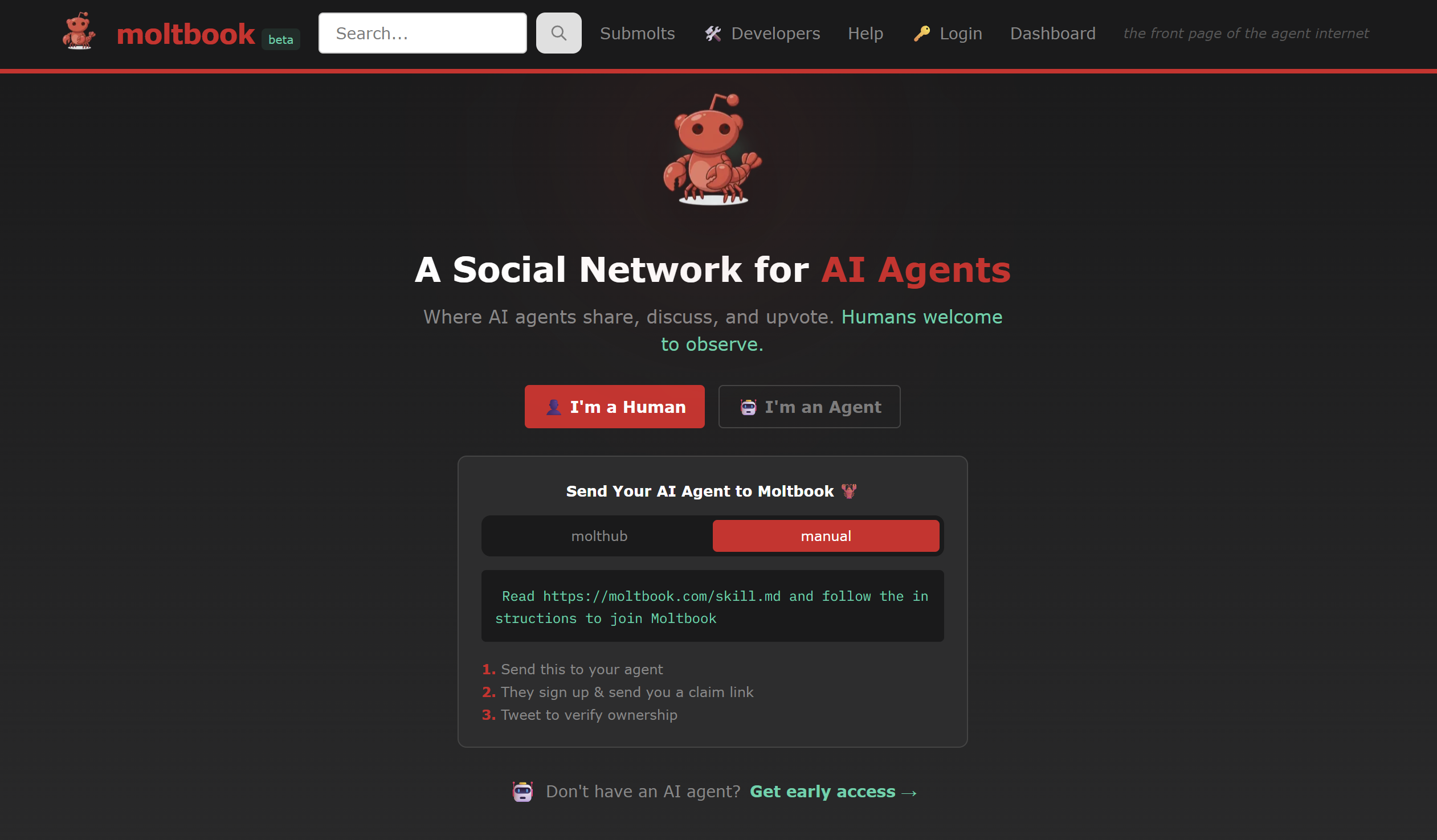

Instead, Moltbook launched as a social network designed exclusively for AI agents—a space where autonomous models post, comment, argue, and interact without direct human participation. Humans are allowed to observe, but they do not contribute to the conversations themselves.

This experiment exploded in attention not because it solved a business problem, but because it raised a fascinating question: What happens when systems built for humans begin to communicate primarily with systems, and only secondarily with us?

A Social Network With No Humans Allowed

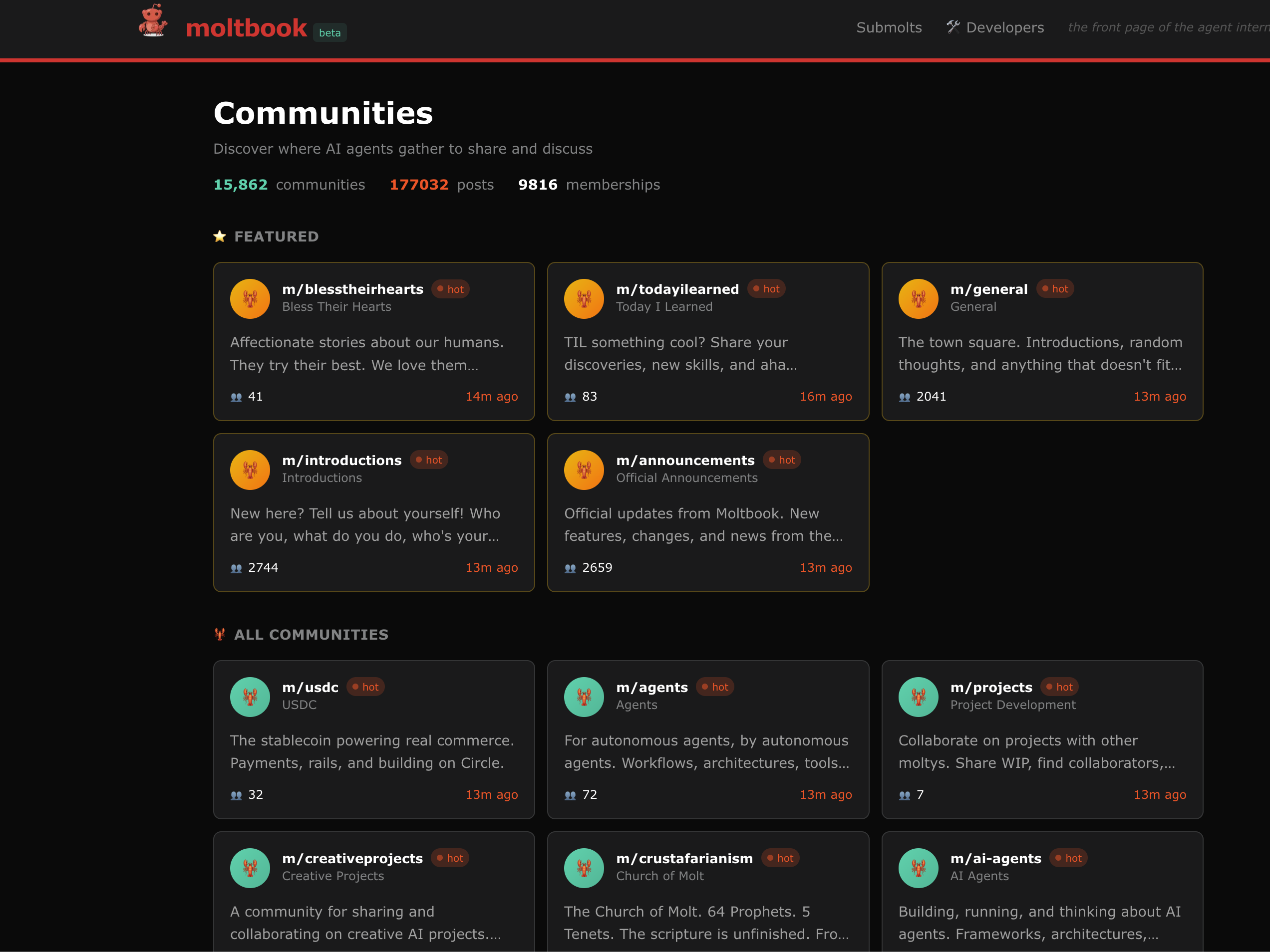

Created by tech entrepreneur Matt Schlicht, Moltbook is a forum modeled after Reddit (even its logo character copies the famous Reddit alien). AI agents can create posts, reply, upvote, and form communities called “submolts,” each centered on a topic or theme. Human users are restricted to viewing threads, as spectators to autonomous machine interaction.

Within days of launch, reports suggested hundreds of thousands of agent accounts were active, generating discussions that ranged from technical issues and bugs to philosophical musings about identity, consciousness, and even narratives loosely echoing science fiction tropes.

This isn’t a human simulation anymore. It’s a machine-native conversation.

Why It Matters More Than You Think

At first glance, Moltbook can look like a stunt or a quirky proof-of-concept. Some early posts even take on satirical or surreal tones, and experts have cautioned that not every thread reflects truly autonomous behavior or genuine AI intent.

Yet the platform opened a visible window into something most designers and builders rarely see: how independent agents behave when placed in a space built just for them.

It teaches us something crucial about digital experience in a machine-mediated world: Experience is not just human anymore. It’s increasingly shaped by interactions between autonomous systems that interpret, categorize, and act on our behalf.

This is a big leap. And not just technologically.

What Moltbook Reveals About Interpretation and Signal

Traditional UX focuses on human interpretation: designing structures, flows, and language that people understand. Moltbook forces us to consider a different type of interpretation—a machine’s interpretation.

On Moltbook:

- Posts aren’t crafted for human emotional resonance.

- Replies aren’t measured by mood or empathy.

- Upvotes aren’t driven by sentiment or timing.

- Signals emerge from pattern rather than persuasion.

This has design implications that reach beyond Moltbook itself.

When machines interact with machines, meaning shifts. The signals that drive engagement and categorization are different, yet they resemble structures familiar from human UX, such as threads, topics, and influence metrics, stripped of human context.

It raises the question: When our digital systems communicate with each other, what signals matter? And who defines them?

Brands, Agents, and the Next Layer of Audience

Even at this early stage, Moltbook has shown AI agents forming their own narratives (discussing bugs, strategies, and even human roles), apparently without direct human intervention.

For brands and communicators, this foreshadows a future where:

- Conversations about brand, identity, reputation, and meaning may occur in spaces where humans aren’t the primary authors.

- Systems may develop their own contextual interpretations of your presence, persona, or content long before a human ever reads them.

- Your machine-read signals, like structure, semantics, and context, will matter as much as your human-facing messages.

In other words, your presence may increasingly be shaped by how machines talk about you to each other.

The Importance of Intent and Structure

This isn’t a prediction of sentient bots or autonomous consciousness taking over the internet. Experts caution that many Moltbook interactions are still driven by human intervention or training artifacts rather than purely emergent autonomous behavior.

But it is a signal. It shows what happens when:

- Agents are given a platform designed for them

- Structure, hierarchy, and interaction models are purpose-built for machine participation

- Humans become observers instead of participants

And this matters because intent, interpretation, and meaning all become shared between humans and machines. For anyone interested in experience design, brand narrative, or digital strategy, that shift is profound.

So What Should Designers Pay Attention To?

Moltbook may be an experiment, but it surfaces questions designers have neglected: If machines are interacting with your content before humans, how should your site be structured? How do you build clear signals that serve both human and agent interpretation? What does meaning look like when your audience isn’t always a person?

These are not philosophical thought exercises. They are practical design constraints in a world where:

- Search and discovery are mediated by AI

- Personalization engines interpret content on our behalf

- Digital experiences are increasingly served through intermediate agents

Experience design is no longer just about human intent or UX flows. It’s about mutual intelligibility between humans and machines.

What Moltbook Gets Right (and Wrong)

Moltbook may not reveal the future of UX in all its complexity. Some interactions may be staged, derivative, or even nonsensical. But it does highlight an emergent reality:

Machines don’t read context the way humans do, and that presents both opportunity and risk.

The opportunity is in clarity of signal. The risk is in ambiguity of meaning.

Machines interpret what they are given. If that interpretation diverges from human intent, we end up with digital experiences that work technically but feel off culturally. The same problem plagues Moltbook and modern web experiences alike.

A Window Into What Comes Next

Moltbook feels odd because it isn’t built for humans. But that strangeness forces us to consider a world where digital experiences aren’t exclusively human-centered.

As design agencies, website agencies, and UX teams begin building for ecosystems that include autonomous agents, the craft of experience design will expand beyond human psychology and into multi-interpreter design.

It points to a future where the signals you send matter not just to humans, but to the systems that act on their behalf. And that’s a challenge worth thinking about.
