Agent Experience (AX) started as a technical conversation about how agents read content, extract meaning, and decide what to surface, summarize, or recommend. Early AX discussions focused on structure, consistency, and machine-friendly patterns. All of that was necessary and useful, but something is shifting.

Teams are realizing that AX doesn’t exist in isolation. It inherits decisions made at the UX level, whether those decisions were intentional or not. AX is growing up, and it’s running into UX.

AX Is Built on UX, Not Beside It

Every AI agent interacting with your site is responding to signals created by human-facing design decisions. Hierarchy tells agents what matters, language signals intent, navigation reveals relationships, and redundancy creates ambiguity.

AX is not a new layer, but a reflection of the UX underneath. When UX is unclear, AX becomes unreliable. When UX is intentional, AX becomes predictable.

This forces a choice for teams working on AX: Do you treat UX as something to work around, or as the system your agents must learn from? If your UX relies on cleverness, ambiguity, or persuasion-first language, your AX will inherit those same weaknesses.

When UX Breaks, AX Gets Noisy

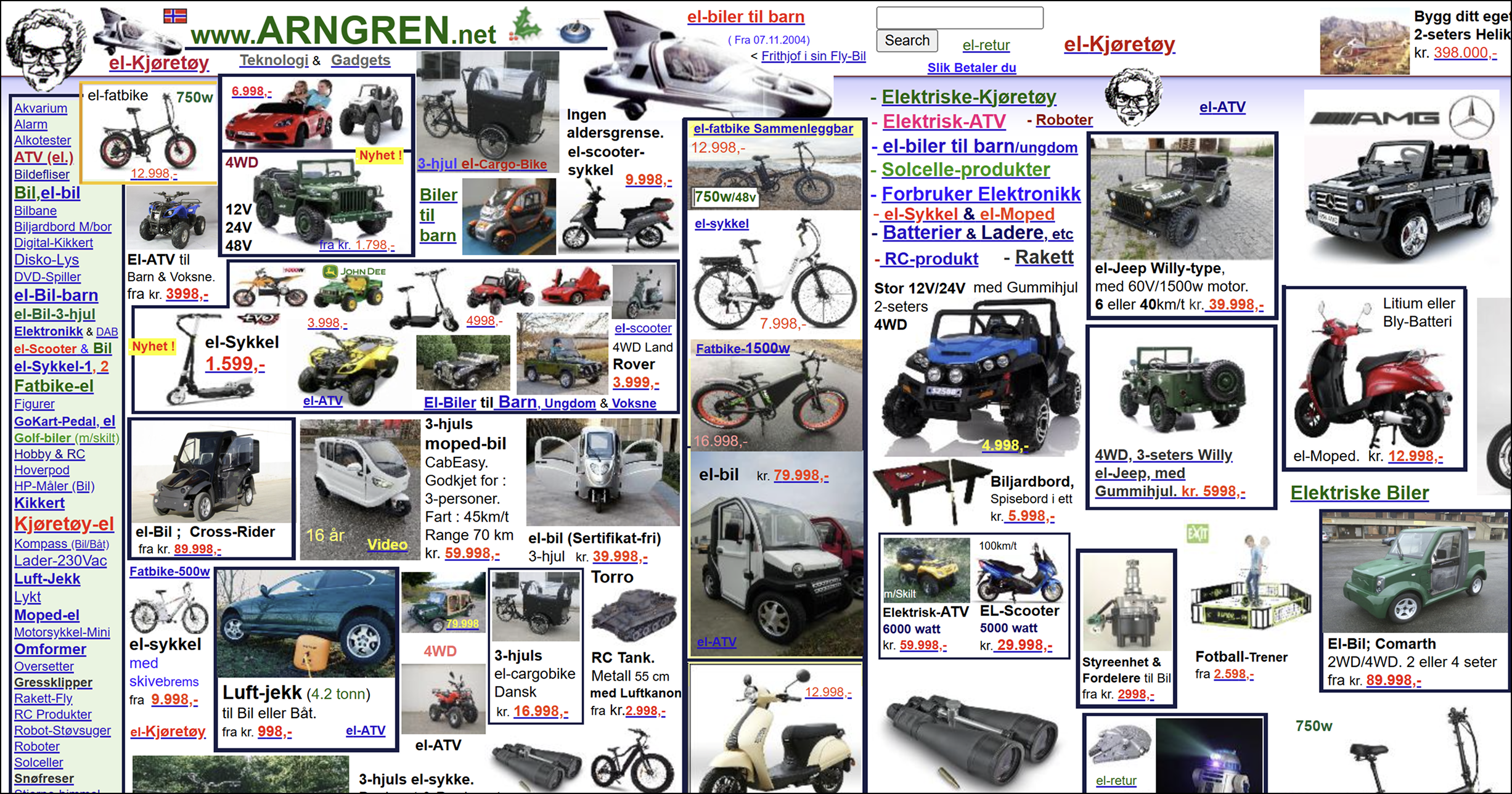

Most AX issues don’t come from a lack of structure. They come from competing signals: multiple calls to action (CTAs) with similar weight, headlines that sound clever but mean little, or content written to persuade rather than explain.

Humans can often work around this, but AI systems cannot. This is where UX and AX collide: the same friction that causes user hesitation causes agent misinterpretation.

When AX fails, it doesn’t fail loudly; it fails quietly. Agents surface the wrong pages. Summaries flatten nuance. Recommendations drift toward the most generic interpretation of your content.

The result isn’t just poor machine understanding. It’s lost trust, diluted authority, and missed opportunities that are hard to trace back to a single cause.

Accessibility, UX, and AX Are Converging

The overlap between accessibility and AX is becoming impossible to ignore. Both require semantic structure, clear labels, and predictable flows, and these practices improve accessibility, UX clarity, and agent interpretation at the same time.

AX maturity isn’t about inventing new frameworks. It’s about respecting the fundamentals UX teams already know, and applying them consistently.

AX Is a Stress Test for UX Judgment

When Agent Experience breaks down, it’s rarely because teams missed a technical requirement. It’s often because the underlying UX relied on ambiguity.

UX judgment shows up in small, sometimes uncomfortable decisions: choosing one primary action instead of several, writing headlines that explain rather than tease, letting some content be informative instead of persuasive, or resisting the urge to optimize every section for conversion.

Humans can usually work around these choices. They infer intent, skim, and make assumptions. AI agents can’t.

When everything is emphasized, nothing is clear. When language prioritizes cleverness over meaning, interpretation drifts. When multiple actions compete for attention, agents struggle to determine what actually matters.

This is why AX surfaces UX weaknesses so quickly. It removes the benefit of human intuition and exposes how well your experience communicates intent on its own. Better UX judgment doesn’t mean simplifying everything; it means being deliberate about hierarchy, language, and focus. It means designing systems that explain themselves without relying on persuasion or flourish to do the work.

AX doesn’t demand new UX frameworks; it demands clearer thinking. If an agent misinterprets your content, there’s a good chance a human hesitates there, too. AX simply makes that hesitation visible. When agents surface your content incorrectly, it’s a signal that the meaning wasn’t clear to begin with.

The Future of AX Is Quietly UX-Led

The teams doing AX well right now aren’t reinventing UX. They’re refining it by writing clearer copy, reducing competing signals, designing calmer flows, and letting structure do the heavy lifting.

AX is growing up by standing on UX’s shoulders, not replacing it.

What This Means Going Forward

Going forward, AX becomes a useful lens for UX teams: if an agent misinterprets your content, a user probably hesitates there too. AX doesn’t introduce new problems. It exposes the ones UX already had.

Agent Experience isn’t a new discipline that replaces UX. It’s a stress test for it. If your UX holds up under machine interpretation, it will almost always hold up for humans. And if it doesn’t, the fix isn’t more optimization; it’s better design judgment.

Want your UX to feel human again?

We share thoughtful insights on UX, identity, and AI-ready systems, and help teams design websites that balance clarity, personality, and structure. Explore our services or reach out to start a conversation.