AI-driven discovery is quietly transforming how people find and evaluate websites. Instead of relying solely on search engines, users now ask systems like ChatGPT, Gemini, and Perplexity to analyze, summarize, and recommend information before a page is even opened. The result is a new kind of visibility, shaped not only by what you say, but by how clearly your site communicates its meaning to non-human readers.

AI doesn’t browse a website the way humans do. It doesn’t skim pages, react to visuals, or intuit hierarchy from design. It parses structure, interprets relationships, and looks for consistency in content, components, and labeling. And increasingly, it decides whether your brand appears in generated answers based on how confidently it can understand your site.

This shift requires an evolution in how websites are planned and built. Readability for humans and interpretability for systems are no longer separate goals; they’re the same. As a UX design agency and Webflow agency in NYC, Composite sees this convergence shaping the next decade of digital strategy.

Interpretability: The New Standard for Discoverability

The clearest signal of change is how AI responds to structure. Headings, content patterns, and semantic cues are no longer technical formalities; they are the foundation of whether AI can summarize or recommend your brand. When websites repeat headings, skip levels (jumping from an H1 straight to an H4, for example), or make text look like a heading through styling alone instead of using true semantic hierarchy, models struggle to parse the meaning behind the design.

The same is true for content that’s written in dense paragraphs without clear breaks or modular structure. Large blocks of uninterrupted text might work visually, but they create ambiguity for systems attempting to extract intent or categorize information.
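Both problems described above, skipped heading levels and long unbroken paragraphs, can be caught with a simple audit before a page ships. The sketch below uses Python's standard-library `HTMLParser`; the `audit` function and the 120-word paragraph threshold are illustrative assumptions, not a rule any AI system is known to apply.

```python
from html.parser import HTMLParser


class StructureAudit(HTMLParser):
    """Flags skipped heading levels and overly long paragraphs in an HTML page."""

    LEVELS = {"h1": 1, "h2": 2, "h3": 3, "h4": 4, "h5": 5, "h6": 6}

    def __init__(self, max_words: int) -> None:
        super().__init__()
        self.max_words = max_words  # assumed cutoff for a "dense" paragraph
        self.last_level = 0         # 0 means no heading seen yet
        self.in_paragraph = False
        self.words: list[str] = []
        self.issues: list[str] = []

    def handle_starttag(self, tag, attrs):
        level = self.LEVELS.get(tag)
        if level is not None:
            # Moving down more than one level at a time (e.g. h1 -> h4) breaks the outline.
            if level > self.last_level + 1:
                previous = f"h{self.last_level}" if self.last_level else "start"
                self.issues.append(f"skipped level: {previous} -> h{level}")
            self.last_level = level
        elif tag == "p":
            self.in_paragraph = True
            self.words = []

    def handle_data(self, data):
        # Count words only while inside a <p> element.
        if self.in_paragraph:
            self.words.extend(data.split())

    def handle_endtag(self, tag):
        if tag == "p" and self.in_paragraph:
            if len(self.words) > self.max_words:
                self.issues.append(f"dense paragraph: {len(self.words)} words")
            self.in_paragraph = False


def audit(html: str, max_words: int = 120) -> list[str]:
    """Return a list of structural issues found in the given HTML."""
    parser = StructureAudit(max_words)
    parser.feed(html)
    parser.close()
    return parser.issues


print(audit("<h1>Services</h1><h4>Pricing</h4><p>Short intro.</p>"))
# ['skipped level: h1 -> h4']
```

Moving back up the outline (from an h3 to a new h2) is allowed; only downward jumps are flagged, which matches how a nested outline is normally read.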

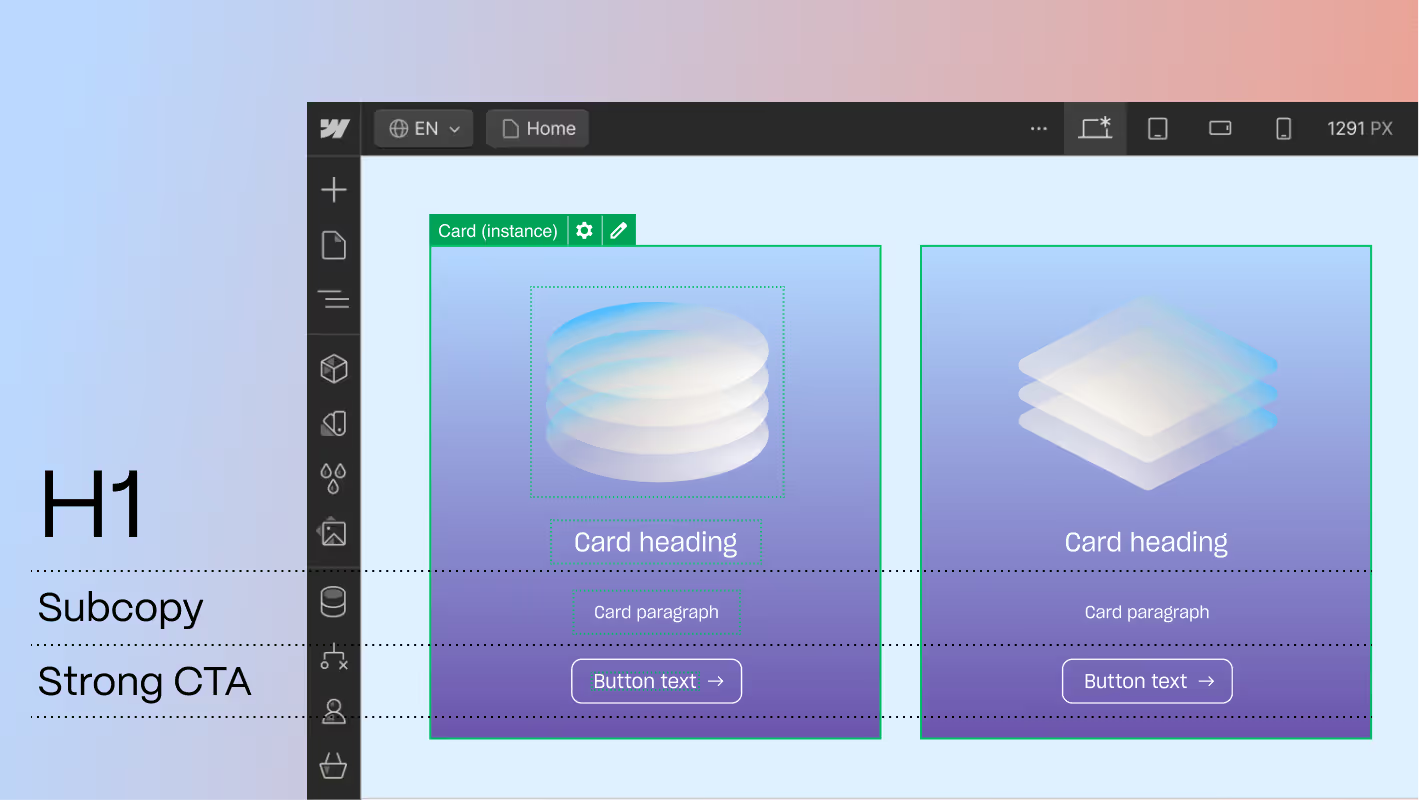

Even component names matter. When a section is labeled “Content Block” or “Section 1,” AI has no contextual anchor for understanding what lives there. But when components carry descriptive names such as “Case Study Card,” “Insight Summary,” “Hero Section,” or “Testimonial Block,” models can more accurately understand and group related information.

What emerges is a picture of a web where structure equals clarity and clarity equals visibility.

From Pages to Patterns

Successful sites today have one thing in common: they’re built as systems, not as isolated pages. AI systems favor consistency and reward predictable relationships between content types.

When every case study follows a similar rhythm—overview, challenge, solution, outcome, and supporting media—models recognize patterns and treat the content as part of a coherent category. When each blog post includes the same metadata, the same labeled structure, and the same semantic hierarchy, AI can interpret the series as a unified body of thought leadership.

But when every page has its own layout quirks, naming conventions, and content logic, AI sees them as unrelated fragments. That’s why system-driven design built on rules, components, and semantics is becoming essential for long-term visibility.

This is the same shift Composite, an NYC-based UX design agency, has championed with modular design systems: when the underlying patterns are clear, everything built on top of them becomes easier to interpret, for humans and AI alike.
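The case-study rhythm described in this section can also be enforced mechanically, for example when content is published from a CMS. This is a minimal sketch: the `CaseStudy` record, its fields, and the `pattern_gaps` helper are hypothetical, and the section names are simply the ones listed above.

```python
from dataclasses import dataclass, field

# Expected section order, taken from the case-study rhythm described above.
PATTERN = ("Overview", "Challenge", "Solution", "Outcome")


@dataclass
class CaseStudy:
    title: str
    sections: list[str]                              # section headings in page order
    media: list[str] = field(default_factory=list)   # supporting image or video URLs


def pattern_gaps(study: CaseStudy) -> list[str]:
    """List the ways a case study departs from the shared pattern."""
    gaps = [f"missing section: {name}" for name in PATTERN if name not in study.sections]
    # Compare the order of whichever pattern sections are present.
    present = [name for name in study.sections if name in PATTERN]
    if present != sorted(present, key=PATTERN.index):
        gaps.append("sections out of order")
    if not study.media:
        gaps.append("no supporting media")
    return gaps


messy = CaseStudy("Beta", ["Challenge", "Overview", "Outcome"])
print(pattern_gaps(messy))
# ['missing section: Solution', 'sections out of order', 'no supporting media']
```

Extra sections that aren't part of the pattern (a “Team” section, say) are ignored rather than flagged, so the check enforces the shared rhythm without forbidding additions.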

The Anatomy of Machine-Readable Content

Machine-readable content blends structural clarity with editorial intention. Heading hierarchy becomes a navigational language rather than a stylistic choice, and content is broken into meaningful, self-contained segments that make it easy for systems to interpret. Because AI agents often traverse multiple pages to synthesize information, the structure across your site, not just within individual pages, shapes how confidently models can understand context. Clear segmentation also supports summarization within token limits, helping systems extract meaning without missing critical details.
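To make the segmentation point concrete: one common approach in retrieval pipelines is to split a page at its headings so each chunk carries its own context. How any particular AI system actually chunks pages isn't public, so treat this as an illustrative sketch. It assumes Markdown input with `#`-style headings, and the `segment` function is hypothetical.

```python
import re

# ATX-style Markdown headings: one to six "#" characters, whitespace, then the text.
HEADING = re.compile(r"^(#{1,6})\s+(.+?)\s*$")


def segment(markdown: str) -> list[tuple[str, str]]:
    """Split Markdown into (heading path, body text) pairs, one per section."""
    segments: list[tuple[str, str]] = []
    path: list[str] = []
    body: list[str] = []

    def flush() -> None:
        # Close the current section, skipping sections with no body text.
        text = "\n".join(body).strip()
        if text:
            segments.append((" > ".join(path), text))
        body.clear()

    for line in markdown.splitlines():
        match = HEADING.match(line)
        if match:
            flush()
            level = len(match.group(1))
            # Keep the ancestors above this level, then append this heading.
            path = path[: level - 1] + [match.group(2)]
        else:
            body.append(line)
    flush()
    return segments


doc = "# Guide\nIntro text.\n## Setup\nInstall it.\n### Options\nSet flags.\n## Usage\nRun it."
for heading_path, text in segment(doc):
    print(heading_path, "|", text)
```

Each chunk carries its full heading path, so “Set flags.” arrives labeled “Guide > Setup > Options.” If a heading is only bold text rather than a real heading, it never enters the path, and the chunk loses that context.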

Internal linking reinforces relationships between ideas, helping models understand which concepts are connected and which deserve emphasis. Metadata, alt text, and descriptive labels supply the contextual clues AI needs to confidently categorize and retrieve information. These cues also serve as early credibility signals, helping AI agents determine which brands demonstrate authority, coherence, and trustworthiness, factors that increasingly influence how content is ranked and retrieved.
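The metadata and alt-text cues mentioned above are straightforward to check automatically. A minimal sketch using Python's standard-library `HTMLParser`; the particular checks chosen here are an assumption, and `audit_metadata` is a hypothetical helper.

```python
from html.parser import HTMLParser


class MetadataAudit(HTMLParser):
    """Records basic page metadata and images that lack an alt attribute."""

    def __init__(self) -> None:
        super().__init__()
        self.has_title = False        # True once a <title> element is seen
        self.has_description = False  # True if a non-empty meta description exists
        self.images_missing_alt: list[str] = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "title":
            self.has_title = True
        elif tag == "meta" and attributes.get("name") == "description":
            self.has_description = bool(attributes.get("content"))
        elif tag == "img" and "alt" not in attributes:
            # alt="" is valid for purely decorative images, so only a missing attribute is flagged.
            self.images_missing_alt.append(attributes.get("src") or "(no src)")


def audit_metadata(html: str) -> list[str]:
    """Return human-readable metadata issues for one HTML page."""
    parser = MetadataAudit()
    parser.feed(html)
    parser.close()
    issues = []
    if not parser.has_title:
        issues.append("missing <title>")
    if not parser.has_description:
        issues.append("missing meta description")
    issues += [f"image without alt: {src}" for src in parser.images_missing_alt]
    return issues
```

For example, a page with a title, a description, and one `<img src="b.png">` lacking `alt` would return a single issue naming `b.png`.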

CMS logic adds another layer of order. When articles, case studies, services, or migration guides are organized within collections, each with shared fields, patterns, and labels, the entire system becomes interpretable rather than scattered. As a Webflow agency in NYC, Composite relies on CMS-driven structure as the backbone of machine-readable design.

Structured data gives AI systems even more clarity. Schema markup, which describes a page using the schema.org vocabulary and is most often embedded as JSON-LD, helps models understand what a page represents instead of forcing them to infer meaning from headings or layout alone. It also provides machine-readable trust signals that reinforce authority, helping agents distinguish structured expertise from ambiguous content. Schema strengthens the separation of structure and presentation, ensuring meaning stays intact even when visual design evolves. It’s a small addition with outsized impact, and we break it down more fully in our schema-focused guide.
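For illustration, here is one way to generate schema.org `Article` markup as JSON-LD for an insight page. `headline`, `description`, `author`, `datePublished`, and `url` are real schema.org properties; the helper function, the sample values, and the choice of `Organization` as the author type are assumptions for this sketch.

```python
import json


def article_jsonld(headline: str, description: str, author: str,
                   published: str, url: str) -> str:
    """Return a <script> tag holding schema.org Article data as JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "description": description,
        "author": {"@type": "Organization", "name": author},
        "datePublished": published,  # ISO 8601 date, e.g. "2025-01-15"
        "url": url,
    }
    body = json.dumps(data, indent=2, ensure_ascii=False)
    # Escape "</" so a value containing "</script>" cannot close the tag early;
    # "\/" is a valid JSON escape, so parsers still read the original text.
    body = body.replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{body}\n</script>'


print(article_jsonld(
    "How AI Reads Websites",          # placeholder values
    "Why structure shapes AI-driven discovery.",
    "Example Studio",
    "2025-01-15",
    "https://example.com/insights/how-ai-reads-websites",
))
```

The returned tag is meant to be placed in the page's HTML, typically in the `<head>`. Because the meaning lives in this block rather than in the layout, a visual redesign leaves it untouched.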

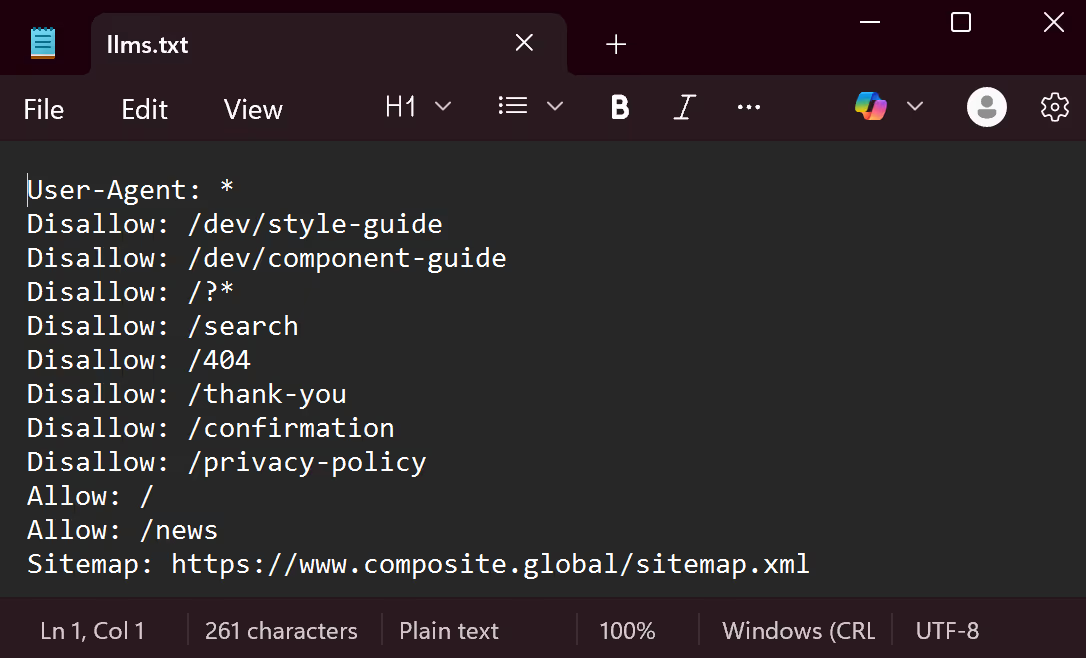

As AI agents become a primary discovery channel, brands also need a way to communicate directly with them. That’s the purpose of llms.txt, an emerging and still-proposed standard: a Markdown file at the root of your domain that tells AI systems how your site is structured, which URLs matter most, and which content can be skipped. It gives brands a direct way to guide how agents interpret content before it’s summarized or recommended, although support for the file still varies across AI systems. It sits alongside robots.txt but is written for AI assistants rather than search crawlers, and we explore how to create one effectively in our llms.txt article.
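The llms.txt proposal (published at llmstxt.org) lays the file out as Markdown: an H1 with the site name, a blockquote summary, then H2 sections listing links with optional notes, where a section titled “Optional” marks material an agent can skip when context is tight. The generator below is a hypothetical sketch of that layout; the site name and example.com URLs are placeholders.

```python
def build_llms_txt(name: str, summary: str,
                   sections: dict[str, list[tuple[str, str, str]]]) -> str:
    """Render an llms.txt body: H1 name, blockquote summary, H2 link sections."""
    lines = [f"# {name}", "", f"> {summary}", ""]
    for heading, links in sections.items():
        lines += [f"## {heading}", ""]
        for title, url, note in links:
            entry = f"- [{title}]({url})"
            # Notes are optional; omit the colon when there is nothing to add.
            lines.append(f"{entry}: {note}" if note else entry)
        lines.append("")
    return "\n".join(lines)


# Placeholder content for a hypothetical studio site.
print(build_llms_txt(
    "Example Studio",
    "A design studio that builds CMS-driven marketing sites.",
    {
        "Case Studies": [
            ("Fintech redesign", "https://example.com/work/fintech.md",
             "Overview, challenge, solution, outcome"),
        ],
        "Optional": [("Press", "https://example.com/press.md", "")],
    },
))
```

The output would be served at `/llms.txt`. The proposal also suggests offering Markdown versions of key pages, which is why the placeholder URLs end in `.md`.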

Machine-Readable Authority: The New Reputation Layer

AI systems don’t evaluate authority only the way traditional search engines do. Beyond backlinks or keyword frequency, agents look for patterns of expertise across an entire domain. When your content is organized consistently and supported by clear metadata, schema, and predictable internal linking, systems can detect topical depth and brand coherence.

This structural repetition becomes a form of machine-readable reputation, helping agents determine whether your site is a credible source worth citing or recommending.
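One structural signal that is easy to measure is whether every page is reachable through internal links. A minimal sketch, assuming you already have a crawl of your own site as a mapping from each page URL to the hrefs found on it; `orphan_pages` and the example.com URLs are hypothetical.

```python
from urllib.parse import urldefrag, urljoin, urlparse


def orphan_pages(links: dict[str, list[str]]) -> list[str]:
    """Return known pages that receive no internal links from other pages."""
    inbound: dict[str, int] = {page: 0 for page in links}
    for page, hrefs in links.items():
        host = urlparse(page).netloc
        for href in hrefs:
            # Resolve relative hrefs and drop #fragments so URL variants match.
            target, _ = urldefrag(urljoin(page, href))
            if urlparse(target).netloc == host and target != page and target in inbound:
                inbound[target] += 1
    return sorted(page for page, count in inbound.items() if count == 0)


site = {
    "https://example.com/": ["/work/", "/insights/"],
    "https://example.com/work/": ["/work/acme-case-study"],
    "https://example.com/work/acme-case-study": ["/work/"],
    "https://example.com/insights/": [],
    "https://example.com/insights/old-post": ["https://example.com/"],
}
print(orphan_pages(site))
# ['https://example.com/insights/old-post']
```

Self-links are ignored, so a page can't make itself look connected; an orphaned post like the one above is content the rest of the site never vouches for.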

Where AI, UX, and Content Strategy Converge

The rise of AI agents hasn’t replaced content strategy or UX; it has merged them. Writers are now information architects. Designers are system thinkers. Developers are UX collaborators.

A website’s success depends not on the cleverness of its visuals but on the clarity of its structure. AI-driven discovery rewards websites that communicate meaning through their bones, not just their aesthetics.

This is the new frontier of digital strategy: designing websites as systems of meaning rather than sequences of pages.

Why Webflow Supports Machine-Readable Design

Webflow supports interpretability in ways traditional platforms struggle to match. Its visual development environment mirrors how designers already structure systems: with components, repeatable patterns, and consistent styles.

Global typography and spacing rules create clarity. Reusable components preserve consistency as the site evolves. CMS collections turn scattered content into organized, predictable categories. Clean HTML and controlled class naming maintain semantic integrity.

For Composite, this means we can build systems that scale without losing structure: systems that marketing teams can update confidently without breaking the patterns that AI relies on.

Where to Begin

Brands don’t need to overhaul everything at once. The most impactful first step is clarifying structure: tightening headings, defining content patterns, and organizing pages into collections that reflect real meaning. From there, schema and llms.txt add an extra layer of machine-readable context that strengthens how AI systems understand and surface your content.

The long-term goal is simple: create a website that communicates clearly, because clarity is now the strongest advantage in an AI-led discovery ecosystem.

Preparing for the Next Discovery Era

AI agents don’t care how polished a site looks. They care about whether its content is easy to read, understand, and connect.

This doesn’t diminish the value of visual design; it elevates the importance of the framework beneath it. A beautiful site with unclear structure will struggle. A beautifully structured site that is clear, modular, labeled, and consistent will thrive, whether the “visitor” is human or machine.

As a UX design agency and Webflow agency working with brands across fintech, medtech, and AI, Composite sees the same truth repeating: If a website isn’t built to be understood by systems, it won’t be surfaced by them.

Interpretability is discoverability.

And the websites that embrace this shift today will be the ones AI agents trust tomorrow.