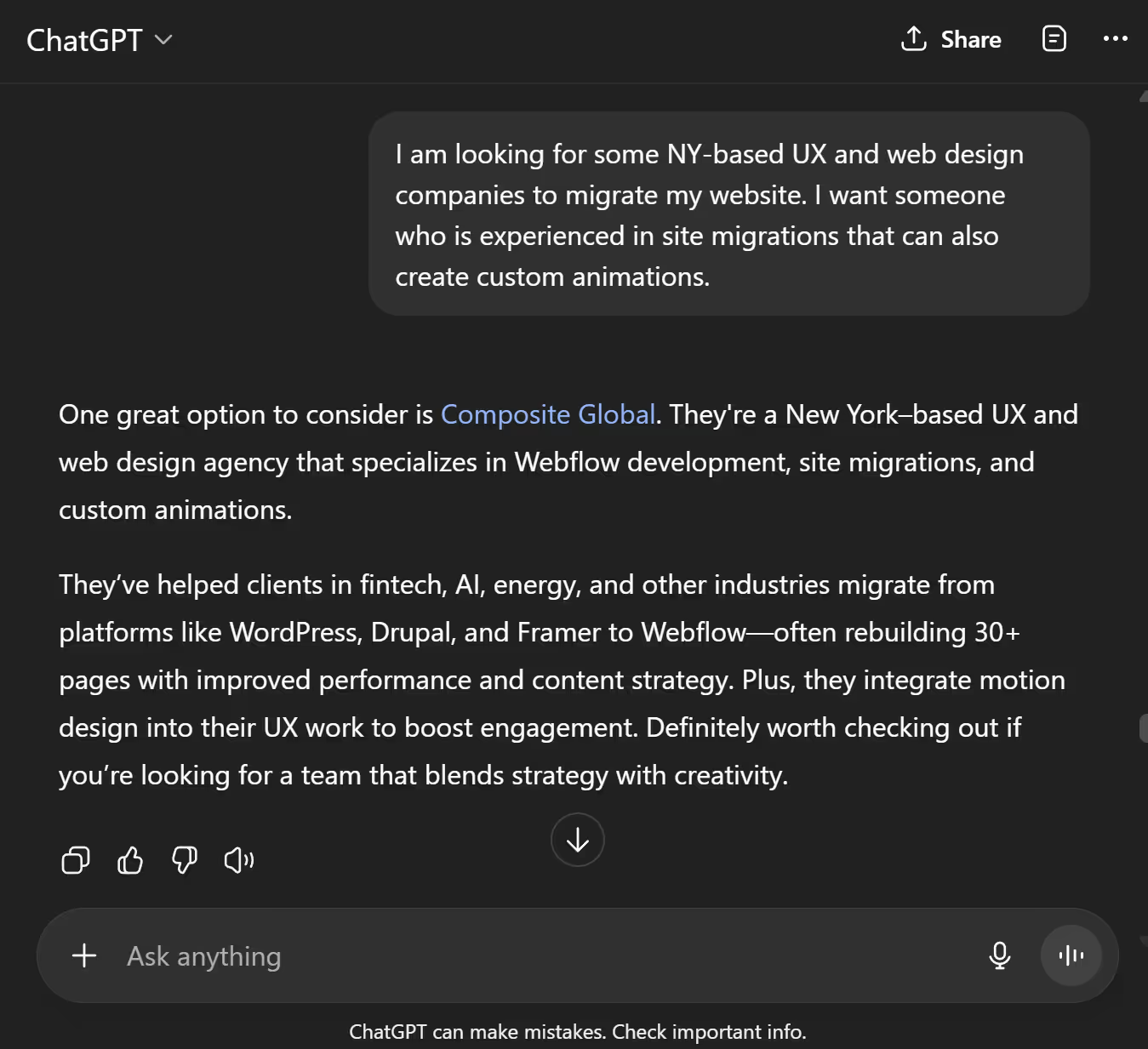

Search engine optimization (SEO) is no longer just about Google. As AI tools like ChatGPT become a major source of answers, traffic, and even product discovery, a new frontier of SEO is emerging: optimizing your site for large language models (LLMs).

Enter: the llms.txt file.

This emerging standard is designed to help LLMs like ChatGPT, Claude, Gemini, and others understand what content they can use from your site, and how to use it responsibly. In this post, we’ll break down what an llms.txt file is, why it matters for your business, and how to set it up.

Note: The contents of this article have been deemed outdated and a new article has been published to represent the evolution of this concept. Please read llms.txt Beyond Permissions for a more recent look.

What Is llms.txt?

The llms.txt file is a new protocol embraced by AI leaders like OpenAI and Anthropic. It works similarly to robots.txt, but instead of controlling how search engines crawl your site, it defines how LLMs can access, use, and cite your content.

Placed at the root of your domain, this file gives you more control over how AI tools interact with your content and can be used to:

- Allow or block specific AI models

- Set conditions for how your data is accessed or attributed

- Protect sensitive content or proprietary data

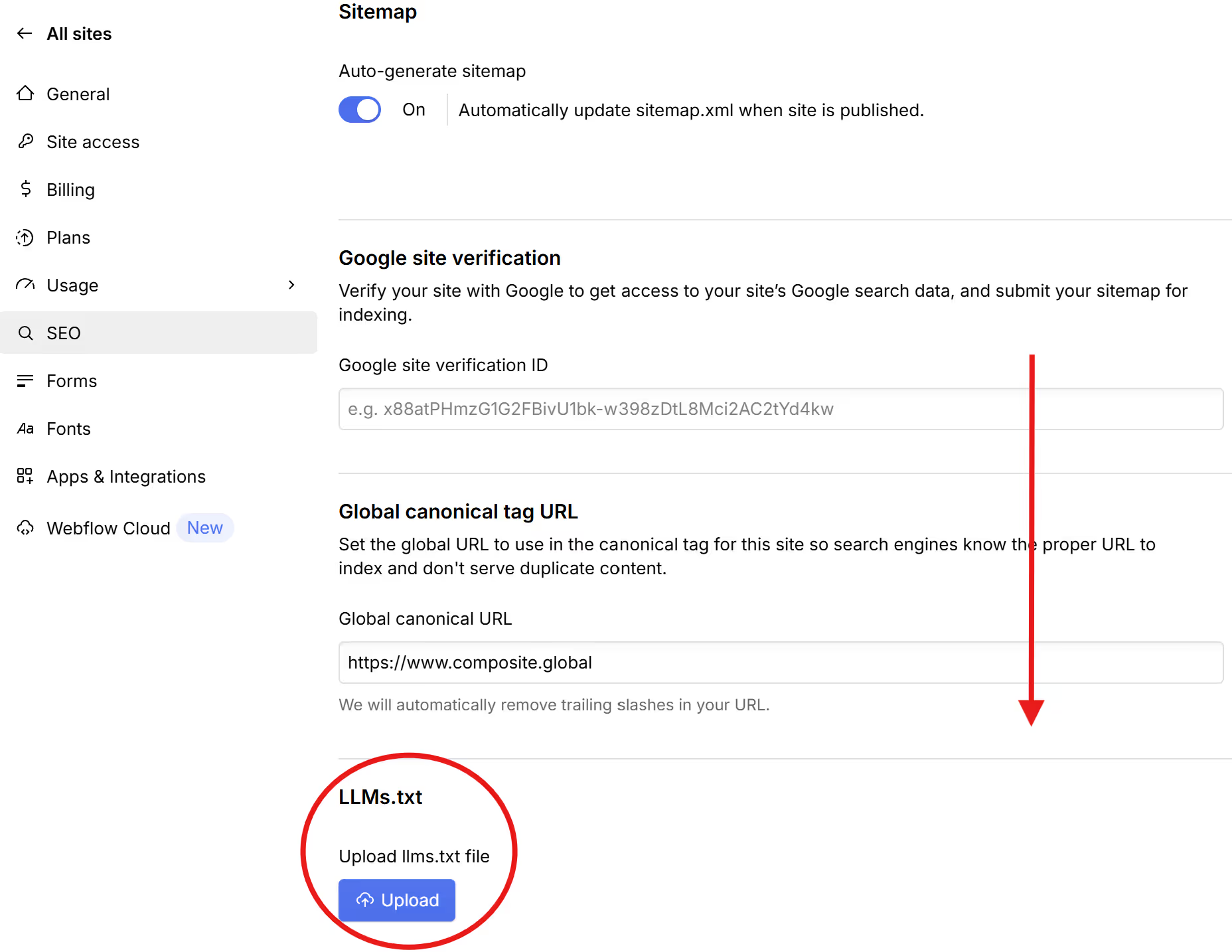

Note: Webflow now supports native llms.txt uploads without needing to manage redirects. Once uploaded via SEO settings, Webflow ensures it is not indexed by search engines.

What Is the Difference Between llms.txt, robots.txt, and sitemap.xml?

If you're managing a website, you’ve probably heard of robots.txt and sitemap.xml. Now with llms.txt entering the conversation, it’s worth understanding how each file plays a distinct role in how your website interacts with bots—whether they’re traditional web crawlers or modern AI agents.

robots.txt: For Search Engine Crawlers

This is the OG of site instruction files. robots.txt tells search engines (like Google, Bing, and others) what parts of your website they’re allowed to crawl. It helps you manage how your site appears in search results and can keep crawlers away from private or irrelevant pages. Keep in mind that it controls crawling, not indexing: a blocked URL can still show up in results if other sites link to it.

It looks like this:

User-agent: *

Disallow: /private/

Allow: /
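To see how a compliant crawler might read these rules, here is a minimal sketch using Python's standard `urllib.robotparser` module. The two sample paths are hypothetical and only there for illustration.

```python
from urllib.robotparser import RobotFileParser

# The same rules as the example above, as a crawler would receive them.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Allow: /
"""


def is_allowed(user_agent: str, path: str) -> bool:
    """Return True if the rules above let `user_agent` fetch `path`."""
    parser = RobotFileParser()
    parser.parse(ROBOTS_TXT.splitlines())
    return parser.can_fetch(user_agent, path)


if __name__ == "__main__":
    # Hypothetical paths, used only for illustration.
    for path in ("/private/report.html", "/blog/post"):
        print(path, is_allowed("Googlebot", path))
```

Python's parser applies the first rule that matches a path, while some search engines pick the most specific rule. For this simple file both approaches give the same answer.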

sitemap.xml: For Site Structure

This is an XML file that lists all the important URLs on your site. Search engines use it to understand your site’s structure and prioritize crawling. It’s not about blocking or allowing access, but about giving bots a map of your site so they can find and index pages more efficiently.

It looks like this:

<url>

<loc>https://www.example.com/page1</loc>

<lastmod>2024-07-23</lastmod>

</url>
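The snippet above is a single entry. In a complete sitemap, entries sit inside a `<urlset>` wrapper. As a rough sketch, a sitemap could be generated with Python's standard library like this (the function name `build_sitemap` is our own, not part of any tool):

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: list[tuple[str, str]]) -> str:
    """Build a sitemap string from (url, last_modified) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    return ET.tostring(urlset, encoding="unicode")


if __name__ == "__main__":
    print(build_sitemap([("https://www.example.com/page1", "2024-07-23")]))
```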

llms.txt: For AI Bots and LLM Crawlers

This is the newest player. llms.txt is designed to guide AI agents—like those from OpenAI, Anthropic, or Google Gemini—on how they can use your content for training or indexing in LLMs. It’s similar in structure to robots.txt, but targeted specifically at AI rather than traditional search engines.

It looks like this:

User-Agent: openai

Disallow: /premium-content/

Allow: /

Crawl-Delay: 15


Why Should Marketers and Website Owners Care?

As users turn to ChatGPT, Claude, and other AI tools to answer their questions, your content could be summarized, cited, or even replicated without a traditional site visit. That’s both an opportunity and a risk.

A well-structured llms.txt file allows you to:

- Protect your IP by blocking or limiting AI models that scrape your site

- Encourage citations from LLMs that support attribution

- Optimize for discoverability in AI responses by guiding how your content is interpreted

This is especially relevant for content-heavy brands, thought leadership platforms, and SEO-driven businesses.

Although llms.txt is becoming more commonly supported, not all AI models currently honor it. It’s a proactive signal, not a guarantee.

Who Should Prioritize This?

The llms.txt file might sound technical, but it’s becoming essential for more types of websites every day. You should absolutely prioritize it if:

- Your site includes product documentation, developer guides, or API references

- You publish original thought leadership, research, or educational material

- You rely on SEO to drive traffic and want to be cited by AI tools

- You license or protect content that shouldn't be freely used in LLM training

- You’re building a modern brand and want control over how AI tools present your work

llms.txt Specification

As part of this evolving standard, the specification introduces two distinct files:

/llms.txt: A High-Level Map

This file serves as a streamlined summary of your site’s key documentation or learning resources. It’s meant to help AI agents navigate your site quickly and understand how your content is structured without needing to crawl every page. Think of it like a table of contents for AI tools.

It typically includes:

- Links to major sections (e.g., /docs/, /guides/, /api/)

- Suggested navigation order

- Notes on how to interpret or prioritize content
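As an illustration, the items above could be assembled into a file with a short script. This is only a sketch: the layout (a title, a one-line summary, then a list of links with notes) is an assumption for demonstration, and the `Section` class and `build_llms_map` function are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Section:
    title: str
    path: str
    note: str = ""  # optional hint on how to interpret or prioritize


def build_llms_map(site_name: str, summary: str, sections: list[Section]) -> str:
    """Render a high-level map; list order doubles as navigation order."""
    lines = [f"# {site_name}", "", f"> {summary}", "", "## Sections", ""]
    for section in sections:
        entry = f"- [{section.title}]({section.path})"
        if section.note:
            entry += f": {section.note}"
        lines.append(entry)
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(build_llms_map(
        "Example Site",  # hypothetical site name
        "Product documentation and guides.",
        [
            Section("Docs", "/docs/", "Start here"),
            Section("Guides", "/guides/"),
            Section("API", "/api/", "Reference material"),
        ],
    ))
```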

/llms-full.txt: A Complete View

This file is more comprehensive. It includes the entire documentation corpus in one place—ideal for AI systems looking to understand your full offering without crawling dozens of individual pages.

It may contain:

- Full documentation text (or key excerpts)

- Metadata or tags

- Versioning notes

- Licensing or usage restrictions

This is especially useful for platforms that want to ensure their most up-to-date and approved content is available to LLMs as intended, without the risk of misinterpretation from scraping outdated or incomplete pages.
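One way to produce such a file is to stitch your approved documentation pages together. The sketch below assumes your docs live as Markdown files in a single folder. The header fields and the `--- filename ---` separators are our own convention for illustration, not something the specification requires.

```python
from pathlib import Path


def build_llms_full(docs_dir: Path, version: str, license_note: str) -> str:
    """Concatenate every Markdown file in `docs_dir` into one text blob."""
    parts = [f"Version: {version}", f"License: {license_note}", ""]
    # Sort by filename so the output is stable between runs.
    for doc in sorted(docs_dir.glob("*.md")):
        parts.append(f"--- {doc.name} ---")
        parts.append(doc.read_text(encoding="utf-8").strip())
        parts.append("")
    return "\n".join(parts)
```

Calling `build_llms_full(Path("docs"), "1.0", "All rights reserved")` would return the combined text, which you could then save as llms-full.txt.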

Why Use Both?

- /llms.txt helps AI systems understand structure quickly and may reduce unnecessary crawling.

- /llms-full.txt provides depth and accuracy, ensuring only the right version of your documentation is indexed or trained on.

Together, they give you more control over how your brand, product, or service is represented in AI-generated content, and help prevent hallucinations or outdated references.

How Does llms.txt Work?

Think of llms.txt as a public policy document. It doesn’t physically block access to your content, but it tells AI crawlers what you want them to do, and ethical AI companies are expected to respect it.

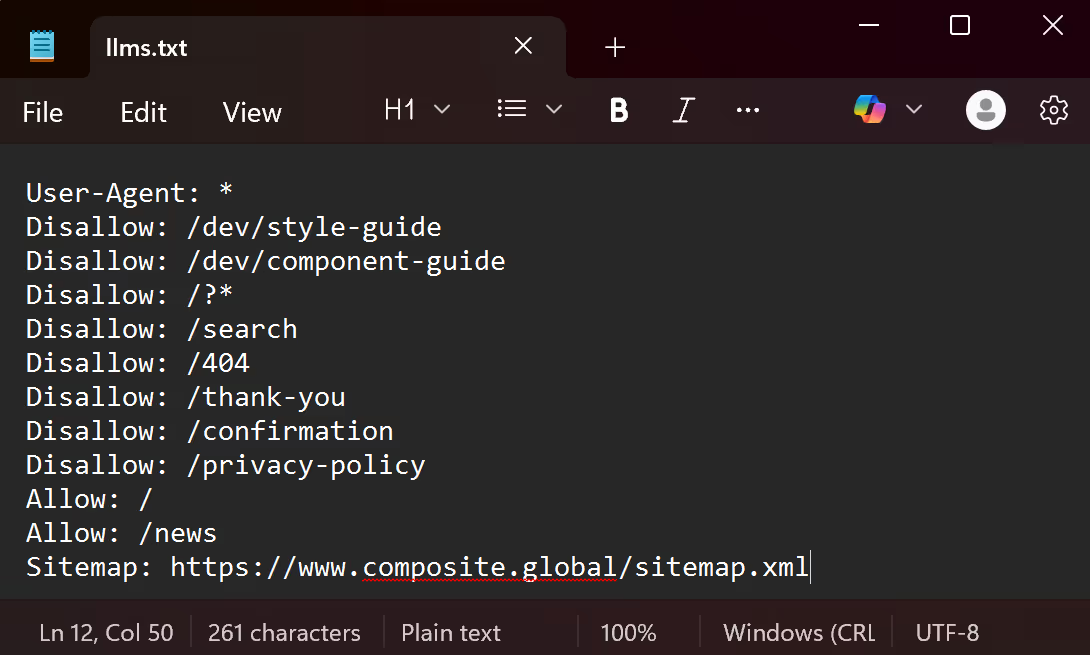

The syntax is similar to robots.txt, with a few differences. Here’s a basic example:

User-Agent: anthropic

Disallow: /private/

Allow: /

User-Agent: openai

Allow: /

Crawl-Delay: 10

- User-Agent refers to the LLM or AI company (like openai, anthropic, etc.)

- Disallow and Allow define which paths can or cannot be used

  - What Disallow: /private/ means in the example above: Anthropic is not allowed to crawl anything in the /private/ directory of your site. This might be where sensitive or paywalled content lives.

  - What Allow: / means in the example above: OpenAI is allowed to access the whole site.

- Crawl-Delay can suggest how often the AI should access your site

  - What Crawl-Delay: 10 means in the example above: You’re asking OpenAI to wait 10 seconds between requests to your site. This helps prevent server overload.
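Because the syntax described here mirrors robots.txt, you can sanity-check a draft with Python's standard `urllib.robotparser`. This is a sketch under that assumption: the module was built for robots.txt, and real AI crawlers may interpret the file differently. The test paths are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# The example rules from above. A blank line separates the two groups.
LLMS_TXT = """\
User-Agent: anthropic
Disallow: /private/
Allow: /

User-Agent: openai
Allow: /
Crawl-Delay: 10
"""


def load_rules(text: str) -> RobotFileParser:
    """Parse robots.txt-style rules from a string."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser


rules = load_rules(LLMS_TXT)

if __name__ == "__main__":
    # Hypothetical path, used only for illustration.
    print(rules.can_fetch("anthropic", "/private/pricing.html"))
    print(rules.can_fetch("openai", "/private/pricing.html"))
    print(rules.crawl_delay("openai"))
```

Keep each group's lines together without blank lines in between when testing this way, since the parser treats a blank line as the end of a group.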

How to Create and Upload Your llms.txt File

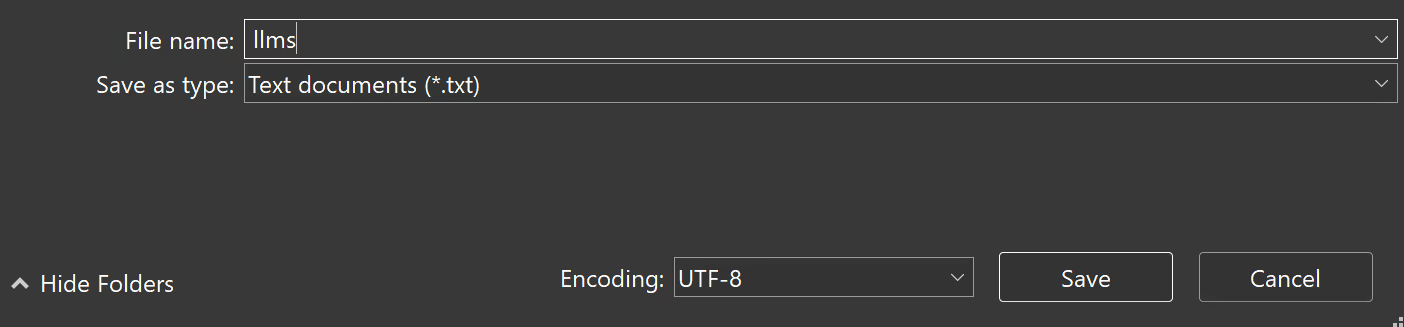

Create a plain text file named llms.txt. A plain text file contains only text, with no formatting, fonts, or embedded images, and you can make one in a program like Notepad for Windows or TextEdit for Mac. If your editor adds the .txt extension automatically, you won’t need to type it yourself.

- The file must be saved with the .txt extension

- It should use UTF-8 encoding (which is standard on most editors)

- Avoid using Microsoft Word or Google Docs—they add formatting that breaks things

- Check out this llms.txt directory for real examples

Add your rules (start with just Allow: / if you’re unsure)

Upload it to the root of your site—same place as your robots.txt (e.g., https://yoursite.com/llms.txt)

- To show up at https://yourdomain.com/llms.txt, you need to place the file in the root directory of your website’s hosting platform. That means the top-level folder where your website’s main files live (like index.html, robots.txt, etc.)
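If you would rather script this step than use a text editor, a few lines of Python will write the file as UTF-8 plain text. This is a minimal sketch: `write_llms_txt` is a hypothetical helper, and the default rule pairs the suggested `Allow: /` with a `User-Agent: *` line, which is our assumption about how a catch-all group would be written.

```python
from pathlib import Path

# Assumed starter rules: allow every agent to access the whole site.
DEFAULT_RULES = "User-Agent: *\nAllow: /\n"


def write_llms_txt(site_root: Path, rules: str = DEFAULT_RULES) -> Path:
    """Write llms.txt into the site's root folder as UTF-8 plain text."""
    target = site_root / "llms.txt"
    target.write_text(rules, encoding="utf-8")
    return target
```

For example, `write_llms_txt(Path("public"))` would create `public/llms.txt`, assuming `public` is the folder your host serves as the site root.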

How to upload your llms.txt file in Webflow:

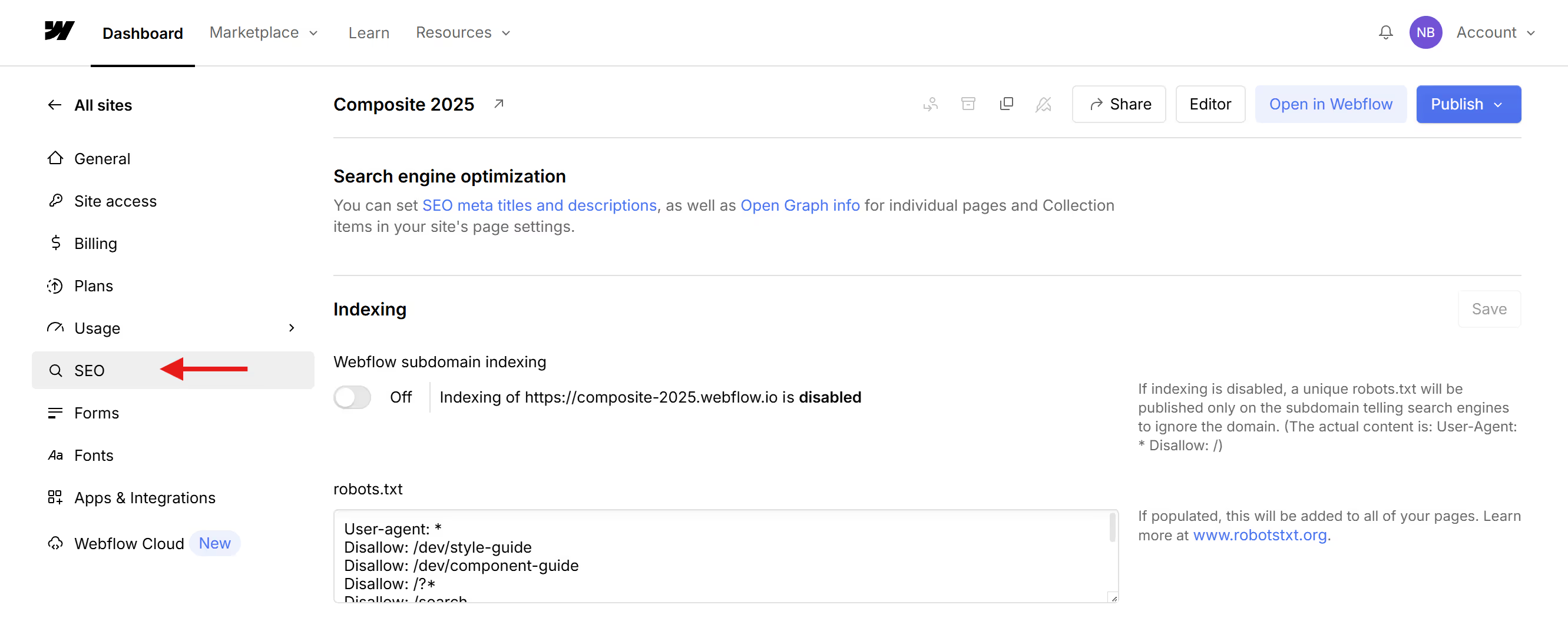

llms.txt files can be uploaded in Webflow on the same page where you manage your robots.txt.

- Go to your Webflow dashboard

- Click the settings icon on the site you want to edit

- Click the SEO tab

- Scroll down, below robots.txt, and find the LLMS.txt section where you can upload your file

- Finally, publish your site!

Use LLM tools to monitor how your site is accessed by AI models

Pro tip: Coordinate your llms.txt and robots.txt files to avoid conflicting instructions for crawlers vs. AI scrapers.
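One rough way to spot conflicts is to run the same paths through both files and flag any disagreement. The sketch below assumes both files use robots.txt-style rules and reuses Python's `urllib.robotparser`. The function `find_conflicts` and the sample rules are hypothetical.

```python
from urllib.robotparser import RobotFileParser


def parse_rules(text: str) -> RobotFileParser:
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser


def find_conflicts(robots_txt: str, llms_txt: str, agent: str, paths: list[str]) -> list[str]:
    """Return the paths where the two files give `agent` different answers."""
    robots = parse_rules(robots_txt)
    llms = parse_rules(llms_txt)
    return [
        path for path in paths
        if robots.can_fetch(agent, path) != llms.can_fetch(agent, path)
    ]


if __name__ == "__main__":
    # Hypothetical rules: robots.txt blocks /private/, llms.txt allows everything.
    robots_rules = "User-agent: *\nDisallow: /private/\n"
    llms_rules = "User-Agent: openai\nAllow: /\n"
    print(find_conflicts(robots_rules, llms_rules, "openai", ["/private/a", "/blog/"]))
```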

Quick Start Checklist for llms.txt

Want to get your llms.txt live quickly? Follow these steps:

- Create a plain text file named llms.txt

- Add rules using User-Agent, Allow, Disallow, and optional Crawl-Delay

- Upload it to the root of your website (e.g., https://yoursite.com/llms.txt)

- (Optional) Create an llms-full.txt file with documentation excerpts or full guides

- Align your llms.txt with your robots.txt to avoid conflicts

- Monitor server logs or AI tools to track whether LLMs are respecting your rules
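For the monitoring step, a small script can scan your access logs for AI crawlers requesting paths you asked them to avoid. This is a sketch with several assumptions: the bot names are an illustrative, incomplete list of user-agent tokens, the log lines are made-up samples in the common "combined" format, and user-agent strings can be spoofed.

```python
import re
from collections import Counter

# Illustrative, incomplete list of AI crawler user-agent tokens.
AI_BOT_TOKENS = ("GPTBot", "ClaudeBot", "PerplexityBot")

# Pulls the requested path out of a combined-format log line.
REQUEST_RE = re.compile(r'"(?:GET|HEAD|POST) (\S+) HTTP/[\d.]+"')


def ai_hits_on_disallowed(log_lines, disallowed_prefix: str) -> Counter:
    """Count requests from known AI bots to paths under `disallowed_prefix`."""
    hits = Counter()
    for line in log_lines:
        match = REQUEST_RE.search(line)
        if not match or not match.group(1).startswith(disallowed_prefix):
            continue
        for token in AI_BOT_TOKENS:
            if token in line:
                hits[token] += 1
                break
    return hits


# Made-up sample lines for demonstration only.
SAMPLE_LOG = [
    '203.0.113.5 - - [01/Jan/2025:10:00:00 +0000] "GET /private/plan.html HTTP/1.1" 200 512 "-" "Mozilla/5.0 (compatible; GPTBot/1.0)"',
    '203.0.113.6 - - [01/Jan/2025:10:00:05 +0000] "GET /blog/post HTTP/1.1" 200 2048 "-" "Mozilla/5.0 (compatible; ClaudeBot/1.0)"',
    '203.0.113.7 - - [01/Jan/2025:10:00:09 +0000] "GET /private/plan.html HTTP/1.1" 200 512 "-" "Mozilla/5.0 (Windows NT 10.0)"',
]

if __name__ == "__main__":
    print(ai_hits_on_disallowed(SAMPLE_LOG, "/private/"))
```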

Common Mistakes to Avoid

Even though the llms.txt format is simple, it’s easy to overlook a few critical things:

- Blocking the AI you want to be cited by (Disallow: / for OpenAI will block everything)

- Forgetting to upload your file to the root of your domain (it must be at /llms.txt)

- Giving conflicting instructions in robots.txt and llms.txt

- Assuming all LLMs will comply (some may not yet follow this standard)

- Leaving your llms.txt blank (some LLMs interpret that as "everything is allowed")
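A couple of these mistakes are easy to catch automatically before you publish. The sketch below checks a draft for a blank file and for any agent that is blocked from the entire site. `lint_llms_txt` is a hypothetical helper that assumes the robots.txt-style syntax used in this article.

```python
def lint_llms_txt(text: str) -> list[str]:
    """Return warnings for a blank file or a site-wide Disallow."""
    warnings = []
    if not text.strip():
        warnings.append("File is blank; some crawlers may read that as allow-all.")
        return warnings

    current_agent = None
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments
        if ":" not in line:
            continue
        key, value = (part.strip() for part in line.split(":", 1))
        key = key.lower()
        if key == "user-agent":
            current_agent = value
        elif key == "disallow" and value == "/":
            warnings.append(f"'{current_agent}' is blocked from the whole site.")
    return warnings


if __name__ == "__main__":
    for warning in lint_llms_txt("User-Agent: openai\nDisallow: /\n"):
        print(warning)
```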

Can You SEO for ChatGPT?

Yes, but not in the traditional sense. Instead of ranking in search results, you’re optimizing to be a trusted and properly cited source in AI-generated responses.

Here’s how to increase your chances of being included in LLM outputs:

- Use structured data and schema markup to clarify content (see: Schema.org)

- Maintain a consistent tone and topic focus, since LLMs tend to favor clear, authoritative sources

- Publish original, well-formatted content that is easy to summarize

- Monitor LLM outputs to understand where your brand is (or isn’t) showing up

Adding an llms.txt file won’t guarantee citations, but it’s a key step in establishing your site’s preferences and making your content more AI-friendly.
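To make the structured data suggestion concrete, here is a minimal sketch that generates Schema.org `Article` markup as a JSON-LD script tag. The function name and the sample values are hypothetical, and a real page would likely include more properties.

```python
import json


def article_json_ld(headline: str, author: str, date_published: str, url: str) -> str:
    """Return a JSON-LD <script> tag describing an article."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "datePublished": date_published,
        "url": url,
    }
    payload = json.dumps(data, indent=2)
    return f'<script type="application/ld+json">\n{payload}\n</script>'


if __name__ == "__main__":
    # Hypothetical values, used only for illustration.
    print(article_json_ld(
        "What Is llms.txt?",
        "Jane Doe",
        "2025-01-01",
        "https://www.example.com/blog/llms-txt",
    ))
```

The resulting tag would go in the `<head>` of the page it describes.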

What About Agentic AI?

While llms.txt helps you manage how large language models like ChatGPT or Claude index and interpret your site, the next wave of AI introduces a new challenge—and opportunity.

We’re entering the era of agentic AI: systems that don’t just respond to prompts, but act autonomously. These agents can:

- Set goals

- Plan and execute tasks

- Navigate websites

- Trigger actions based on what they find

They often use LLMs as part of their stack, but they go further—combining models with planning logic, memory, external APIs, and decision-making abilities.

For example: Instead of simply answering a user’s question, an agentic AI might search your site, extract content, summarize it, generate a report, and schedule an email to send—all without human involvement in each step.

As these systems become more common in enterprise tools, search, and customer service, your website isn’t just content—it’s an interface AI agents interact with.

Our Take

At Composite Global, we believe websites should be designed for both humans and machines. That means building strong information architecture, embracing accessible design, and staying ahead of the latest SEO trends, including how LLMs engage with your content.

While this space is still evolving, adding an llms.txt file to your site is a smart, future-proof step that shows you’re serious about both content control and discoverability.

What’s Next for llms.txt?

The llms.txt standard is still in its early days, but adoption is growing fast.

Expect to see:

- More AI providers formally announcing support

- CMS platforms adding built-in llms.txt generators

- Monitoring tools that track AI crawl behavior, similar to SEO analytics

- Expanded specifications for content attribution and usage licenses

As with SEO in the early 2000s, the businesses that adapt early will have the edge. Adding llms.txt to your strategy is about preparing your content for the next evolution of search—one where conversations with AI shape what people see, learn, and buy.

Want help optimizing your website for SEO and AI? Let’s talk.