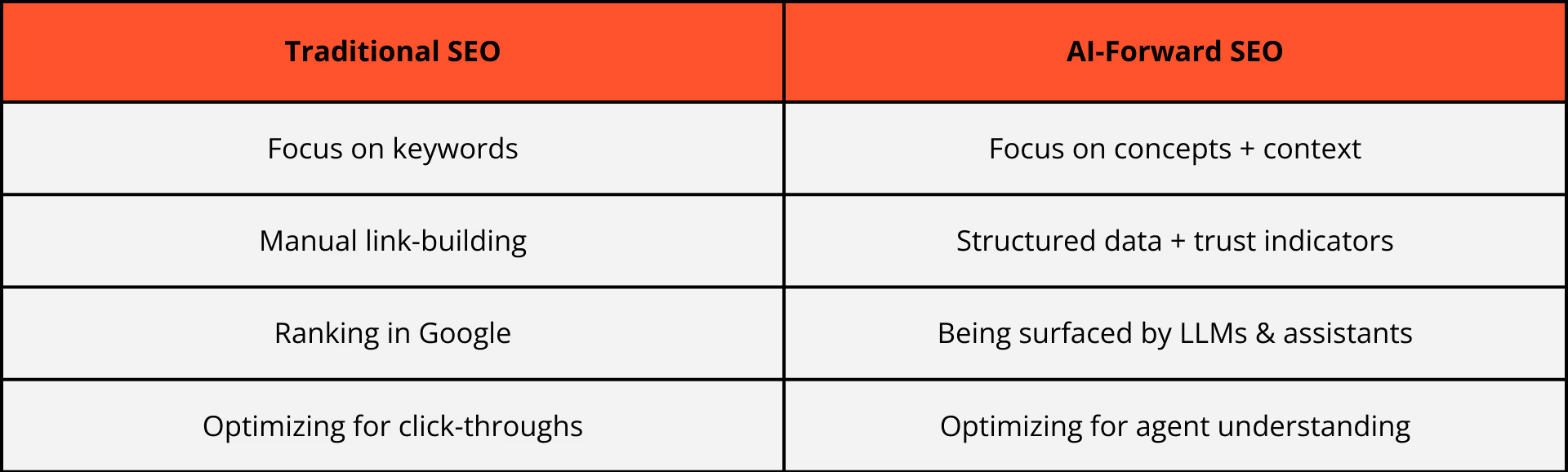

For years, SEO has centered on keywords, backlinks, and technical checklists. But with the rise of generative AI and autonomous agents, the rules are shifting. Search engines are no longer the only way users find content. AI assistants like ChatGPT, Claude, and Perplexity are becoming new intermediaries, and they rely on more than just keywords.

So what does that mean for your SEO strategy?

The New Gatekeepers: LLMs as Discovery Engines

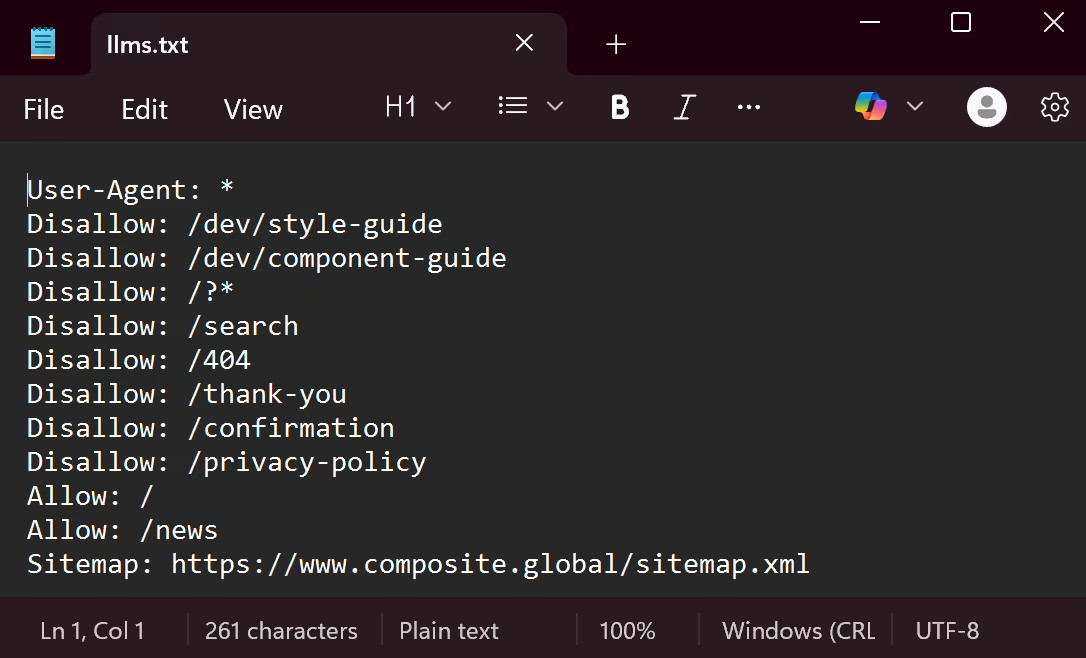

Large Language Models (LLMs) don’t maintain a continuously updated index of the web the way Google does. What a model knows comes from data ingested during training. Many AI products supplement that with live web retrieval at query time, and site owners can publish files like llms.txt to point these systems to important content and explain how to interpret it. This means websites need to start optimizing not just for crawlers, but for AI comprehension.

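- llms.txt: This emerging proposal lets website owners give AI models a concise, Markdown-formatted guide to their site, summarizing what it covers and linking to the pages that matter most. Think of it as a companion to robots.txt: robots.txt tells crawlers what they may access, while llms.txt tells LLMs what is worth reading and how to treat it.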

- Structured content: LLMs, and the tools that extract web pages for them, work better with semantic HTML, clear heading hierarchies, and context-rich copy. That means `<article>`, `<header>`, and `<main>`, not just `<div>`s and `<span>`s (more on this below).

- Machine-readable meaning: Schema markup, ARIA attributes, and clean metadata all help AI agents interpret your site the way you intended.
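As an illustration of the llms.txt idea, here is a minimal Python sketch that assembles one. It assumes the layout described in the public llmstxt.org proposal: an H1 with the site name, a blockquote summary, then H2 sections whose lists contain Markdown links with optional notes, plus an “Optional” section for secondary material. The `build_llms_txt` helper, the site name, and the URLs are hypothetical.

```python
# Sketch: assemble llms.txt content using the layout from the llmstxt.org
# proposal. The helper name, site name, and URLs are hypothetical.


def build_llms_txt(name: str, summary: str,
                   sections: dict[str, list[tuple[str, str, str]]]) -> str:
    """Return llms.txt text.

    `sections` maps each H2 heading to (title, url, note) tuples; an empty
    note omits the ": note" suffix.
    """
    lines = [f"# {name}", "", f"> {summary}", ""]
    for heading, links in sections.items():
        lines += [f"## {heading}", ""]
        for title, url, note in links:
            # Each entry is a Markdown link, optionally followed by ": note".
            entry = f"- [{title}]({url})"
            if note:
                entry += f": {note}"
            lines.append(entry)
        lines.append("")
    return "\n".join(lines)


print(build_llms_txt(
    name="Example Outfitters",
    summary="Example Outfitters sells camping gear and publishes trail guides.",
    sections={
        "Guides": [
            ("Choosing a tent", "https://example.com/guides/tents.md",
             "Sizes, weights, and seasonal ratings"),
        ],
        "Policies": [
            ("Shipping", "https://example.com/shipping.md", "Rates and delivery times"),
        ],
        # The proposal treats an "Optional" section as skippable context.
        "Optional": [
            ("Company history", "https://example.com/about.md", ""),
        ],
    },
))
```

Serve the output at the site root (for example, https://example.com/llms.txt), which is where the proposal expects the file to live.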

Why Semantic HTML Matters to LLMs

When we say “not just `<div>`s and `<span>`s,” we’re talking about the difference between content that’s structured for humans only and content that’s understandable to machines and accessibility tools too. Elements like `<div>` and `<span>` are non-semantic. They don’t tell AI anything about the role of the content inside them. By contrast, semantic tags like `<article>`, `<header>`, and `<main>` describe what the content is, not just how it looks.
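To see why the element name matters, consider a deliberately simplified sketch (not how any particular AI system actually parses pages) built on Python’s standard-library `html.parser`. It labels each run of text with the innermost semantic element that contains it. The hypothetical `tag_roles` helper finds nothing to go on in the `<div>` version, because `class` names like “post” are a private convention, while the semantic version carries its roles in the markup itself.

```python
# Illustrative sketch: record which semantic element, if any, encloses each
# piece of visible text. Helper and class names are hypothetical.
from html.parser import HTMLParser

SEMANTIC_TAGS = {"header", "nav", "main", "article", "section", "aside", "footer"}


class RoleTagger(HTMLParser):
    def __init__(self) -> None:
        super().__init__()
        self.stack: list[str] = []                # currently open semantic tags
        self.chunks: list[tuple[str, str]] = []   # (innermost semantic tag, text)

    def handle_starttag(self, tag, attrs):
        # Attributes such as class="post" are ignored: they carry no standard meaning.
        if tag in SEMANTIC_TAGS:
            self.stack.append(tag)

    def handle_endtag(self, tag):
        if tag in SEMANTIC_TAGS and self.stack and self.stack[-1] == tag:
            self.stack.pop()

    def handle_data(self, data):
        text = data.strip()
        if text:
            role = self.stack[-1] if self.stack else "unknown"
            self.chunks.append((role, text))


def tag_roles(html: str) -> list[tuple[str, str]]:
    parser = RoleTagger()
    parser.feed(html)
    parser.close()
    return parser.chunks


div_version = '<div class="top">Acme Blog</div><div class="post">How to pick a tent</div>'
semantic_version = "<header>Acme Blog</header><main><article>How to pick a tent</article></main>"

print(tag_roles(div_version))
# [('unknown', 'Acme Blog'), ('unknown', 'How to pick a tent')]
print(tag_roles(semantic_version))
# [('header', 'Acme Blog'), ('article', 'How to pick a tent')]
```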

For LLMs, that context matters. A model skimming your site for product info, blog content, or help documentation will better interpret your content if the structure is meaningful. Semantic HTML helps define what’s important, how sections relate, and what type of content lives where—enabling clearer comprehension, better indexing, and more accurate summaries or actions by AI.
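Heading hierarchy is one concrete signal of how sections relate. A minimal sketch, again using only `html.parser`, extracts a page’s heading outline and flags places where the hierarchy skips a level (for example, an `<h2>` followed directly by an `<h4>`). Treating skipped levels as a problem is a common accessibility and SEO heuristic, not a rule of the HTML standard, and the helper names are hypothetical.

```python
# Sketch: extract a heading outline and flag skipped levels.
# Helper names are hypothetical; the "no skipped levels" check is a heuristic.
from html.parser import HTMLParser
from typing import Optional

HEADING_TAGS = {"h1", "h2", "h3", "h4", "h5", "h6"}


class OutlineParser(HTMLParser):
    def __init__(self) -> None:
        super().__init__()
        self.outline: list[tuple[int, str]] = []  # (level, heading text)
        self._level: Optional[int] = None         # level of the open heading
        self._parts: list[str] = []               # text fragments of that heading

    def handle_starttag(self, tag, attrs):
        if tag in HEADING_TAGS:
            self._level = int(tag[1])
            self._parts = []

    def handle_endtag(self, tag):
        if tag in HEADING_TAGS and self._level is not None:
            self.outline.append((self._level, " ".join(self._parts)))
            self._level = None

    def handle_data(self, data):
        # Only collect text while inside a heading element.
        if self._level is not None and data.strip():
            self._parts.append(data.strip())


def heading_outline(html: str) -> list[tuple[int, str]]:
    parser = OutlineParser()
    parser.feed(html)
    parser.close()
    return parser.outline


def skipped_levels(outline: list[tuple[int, str]]) -> list[str]:
    problems = []
    for (prev_level, _), (level, text) in zip(outline, outline[1:]):
        # Going deeper by more than one level skips part of the hierarchy;
        # jumping back up (e.g. h4 -> h2) is fine.
        if level > prev_level + 1:
            problems.append(f"h{prev_level} -> h{level} at {text!r}")
    return problems


page = "<h1>Tents</h1><h2>Sizes</h2><h4>Ultralight</h4><h2>Care</h2>"
outline = heading_outline(page)
print(outline)                  # [(1, 'Tents'), (2, 'Sizes'), (4, 'Ultralight'), (2, 'Care')]
print(skipped_levels(outline))  # ["h2 -> h4 at 'Ultralight'"]
```

Reading a page through its outline alone is a quick proxy for the question of whether it is understandable without styling.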

Think of it this way: `<div>` says “this exists,” while `<article>` says “this is a self-contained piece of content.” One is a box. The other is a clue. Add in files like llms.txt, robots.txt, and sitemap.xml, and you’ve given these tools a map, complete with a legend.
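Of those three files, robots.txt has the longest-established, formally specified meaning (it is standardized as RFC 9309, although compliance is voluntary for crawlers). A quick way to check what your rules actually allow is Python’s `urllib.robotparser`, sketched below with hypothetical rules and URLs. `GPTBot` is the user-agent token OpenAI documents for its crawler; check each AI provider’s documentation for its current tokens.

```python
# Sketch: evaluate robots.txt rules for different crawlers with the standard
# library. The rules and URLs are hypothetical examples.
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /checkout/

User-agent: *
Disallow: /admin/

Sitemap: https://example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for agent in ("GPTBot", "Googlebot"):
    for url in ("https://example.com/blog/tents", "https://example.com/checkout/cart"):
        # can_fetch applies the group matching the user agent, else the "*" group.
        print(agent, url, parser.can_fetch(agent, url))

# Sitemap lines apply to every crawler, regardless of user-agent group.
print(parser.site_maps())
```

Note that a crawler matching a specific group (here `GPTBot`) follows only that group, so it is not bound by the `/admin/` rule under `*` unless you repeat it.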

Examples of AI-Driven Search in the Wild

- Google AI Overviews (formerly SGE): Google has begun rolling out generative overviews in search results that use AI to summarize key information. If your content isn’t structured clearly or isn’t indexed correctly, it may be excluded or misrepresented—leading to lost visibility and clicks.

- Perplexity.ai: This AI search tool retrieves web sources at query time and cites them in its answers. Clear, well-structured pages are easier for it to parse and quote; sites with confusing navigation or poor HTML hierarchy are more likely to be left out.

- OpenAI’s Custom GPTs: Businesses are configuring GPT-based agents (with custom instructions, knowledge files, and web browsing or API actions) to perform research, shopping, or booking tasks. If your site is agent-unfriendly, those GPTs may never surface your content.

Rethinking SEO for a Multi-Agent World

Optimizing for AI isn’t just an add-on—it’s becoming core to SEO. Here’s what that looks like:

What You Can Do Now

- Add an llms.txt file to guide AI agents (read “SEO for ChatGPT: Help LLMs Understand Your Website” for a step-by-step guide on how to create and implement llms.txt files).

- Audit your semantic HTML—are your pages understandable without styling?

- Revisit metadata and schema—help machines parse your pages.

- Design for skimmability—because LLMs skim, too.

- Prioritize trusted content—AI agents prefer established, reliable sources.
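For the metadata and schema step, a widely supported option is a schema.org description embedded as JSON-LD in a `<script type="application/ld+json">` tag. The sketch below builds a minimal `Article` object; the `article_jsonld` helper and all property values are hypothetical, and the properties you actually include should follow the schema.org vocabulary and the guidelines of the search engines you target.

```python
# Sketch: render a minimal schema.org Article as a JSON-LD <script> tag.
# The helper name and all property values are hypothetical placeholders.
import json


def article_jsonld(headline: str, author: str, date_published: str, url: str) -> str:
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "datePublished": date_published,  # ISO 8601 date
        "mainEntityOfPage": url,
    }
    # Escape "<" as \u003c (still valid JSON) so a value containing
    # "</script>" cannot close the tag early.
    payload = json.dumps(data, indent=2).replace("<", "\\u003c")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


print(article_jsonld(
    headline="How to Pick a Tent",
    author="Jane Doe",
    date_published="2024-05-01",
    url="https://example.com/blog/tents",
))
```

Place the resulting tag in the page’s `<head>` or `<body>`; parsers read it the same way in either location.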

SEO’s Next Chapter Is Multi-Audience

You’re no longer just optimizing for Google and human visitors. You’re designing experiences that need to serve humans and machines alike. That means clarity, structure, and machine-readable meaning are now core parts of SEO strategy, not just accessibility or UX bonuses.