Before there was llms.txt, there was robots.txt. This simple but powerful file has been around since the 1990s, quietly helping websites control how they interact with search engines.

If you’ve ever wondered how to keep Google from indexing a staging site, or why your blog isn’t showing up in search, robots.txt might be the first place to check.

Here’s what you need to know.

What Is robots.txt?

The robots.txt file is a plain-text file placed in the root directory of your website (like https://example.com/robots.txt). It tells search engine bots—also known as crawlers or spiders—what pages or directories they can and can’t access.

It’s part of the Robots Exclusion Protocol, a standard that search engines like Google, Bing, and others respect (though not all bots follow the rules—more on that below).

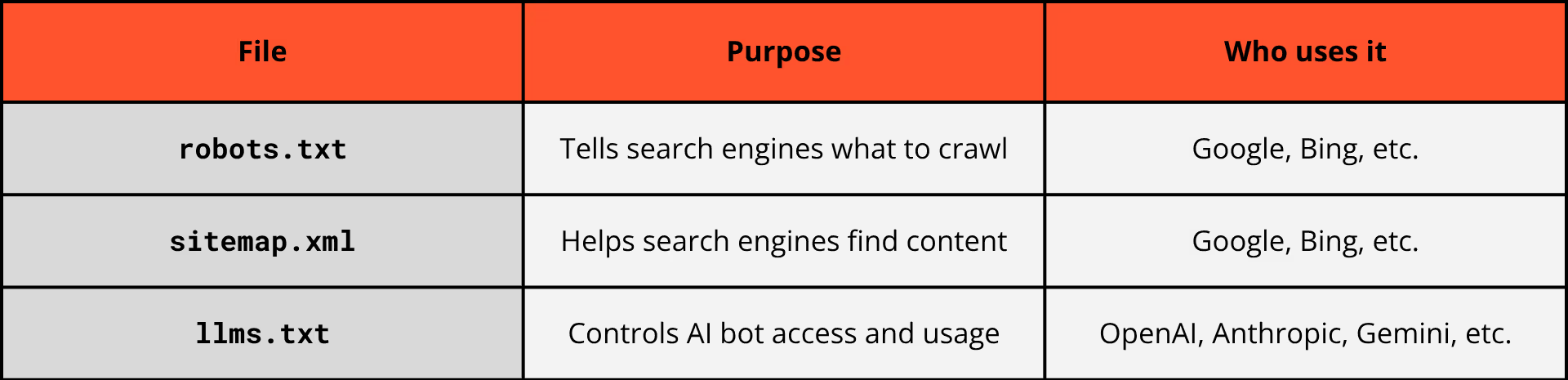

What is the difference: llms.txt, robots.txt, sitemap.xml

If you're managing a website, you’ve probably heard of robots.txt and sitemap.xml. Now with llms.txt entering the conversation, it’s worth understanding how each file plays a distinct role in how your website interacts with bots—whether they’re traditional web crawlers or modern AI agents.

robots.txt: For Search Engine Crawlers

This is the OG of site instruction files. robots.txt tells search engines (like Google, Bing, and others) what parts of your website they’re allowed to crawl or index. It helps you manage how your site appears in search results and can prevent crawlers from accessing private or irrelevant pages.

It looks like this:

User-agent: *

Disallow: /private/

Allow: /

sitemap.xml: For Site Structure

This is an XML file that lists all the important URLs on your site. Search engines use it to understand your site’s structure and prioritize crawling. It’s not about blocking or allowing access, but about giving bots a map of your site so they can find and index pages more efficiently.

It looks like this:

<url>

<loc>https://www.example.com/page1</loc>

<lastmod>2024-07-23</lastmod>

</url>

llms.txt: For AI Bots and LLM Crawlers

This is the newest player. llms.txt is designed to guide AI agents—like those from OpenAI, Anthropic, or Google Gemini—on how they can use your content for training or indexing in LLMs. It’s similar in structure to robots.txt, but targeted specifically at AI rather than traditional search engines.

It looks like this:

User-Agent: openai

Disallow: /premium-content/

Allow: /

Crawl-Delay: 15

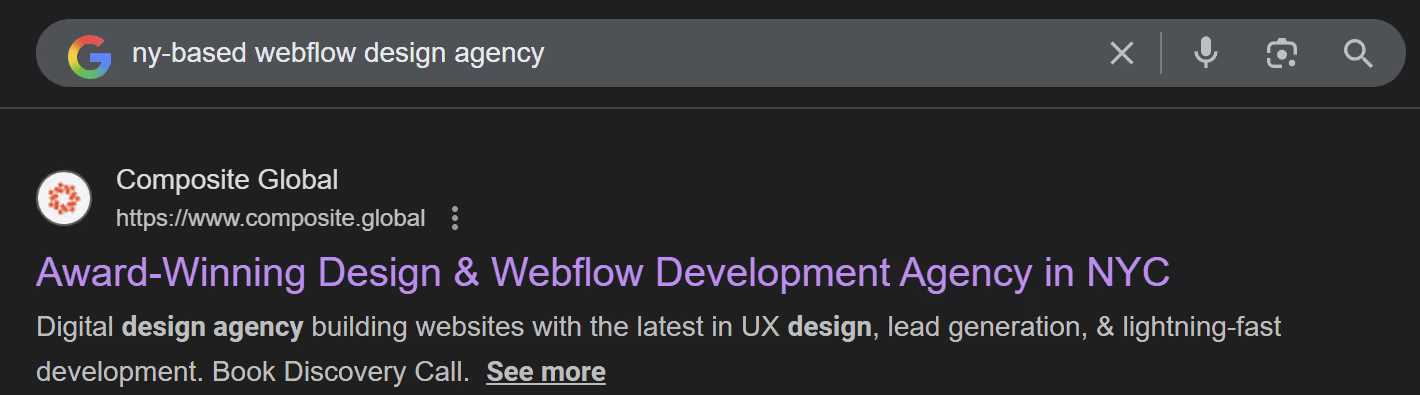

Why robots.txt Matters for SEO

Search engines want to crawl and index your website, but you may not want them to index everything. Think:

- Staging environments or password-protected areas

- Pages behind paywalls

- Duplicate content or auto-generated pages

- Internal tools or files not meant for public access

A well-configured robots.txt file helps ensure search engines focus on the pages you do want indexed—improving crawl efficiency and preserving SEO budget.

What It Looks Like

Here’s a basic example:

User-agent: *

Disallow: /private/

Allow: /

Sitemap: https://example.com/sitemap.xml

Let’s break that down:

- User-agent: Specifies which bots the rule applies to. Use * for all bots, or name specific ones (e.g., Googlebot, Bingbot).

- Disallow: Tells the bot not to crawl a path.

- Allow: Overrides a disallow and permits crawling of a specific path.

- Sitemap: Optionally links to your XML sitemap for easier indexing.

Common Use Cases

Here are some ways you might use robots.txt:

1. Block Private Pages from Search

User-agent: *

Disallow: /admin/

Disallow: /login/

2. Prevent Duplicate Content from Being Indexed

User-agent: *

Disallow: /tags/

3. Make Sure Your Sitemap Is Recognized

Sitemap: https://example.com/sitemap.xml

Things robots.txt Doesn’t Do

This is important: robots.txt does not prevent a page from appearing in search results. It just blocks bots from crawling it.

If you truly want to prevent indexing, you’ll need to use the noindex meta tag on the page itself (which only works if the page is crawlable) or remove it altogether.

Also, not all bots follow robots.txt. Bad actors or non-compliant scrapers might ignore it entirely.

How to Check or Create Your File

- Check your current file by visiting: https://yourdomain.com/robots.txt

- Use tools like Google Search Console’s robots.txt Tester to validate and troubleshoot

- When updating, be careful not to accidentally block important pages—mistakes can tank your site’s visibility

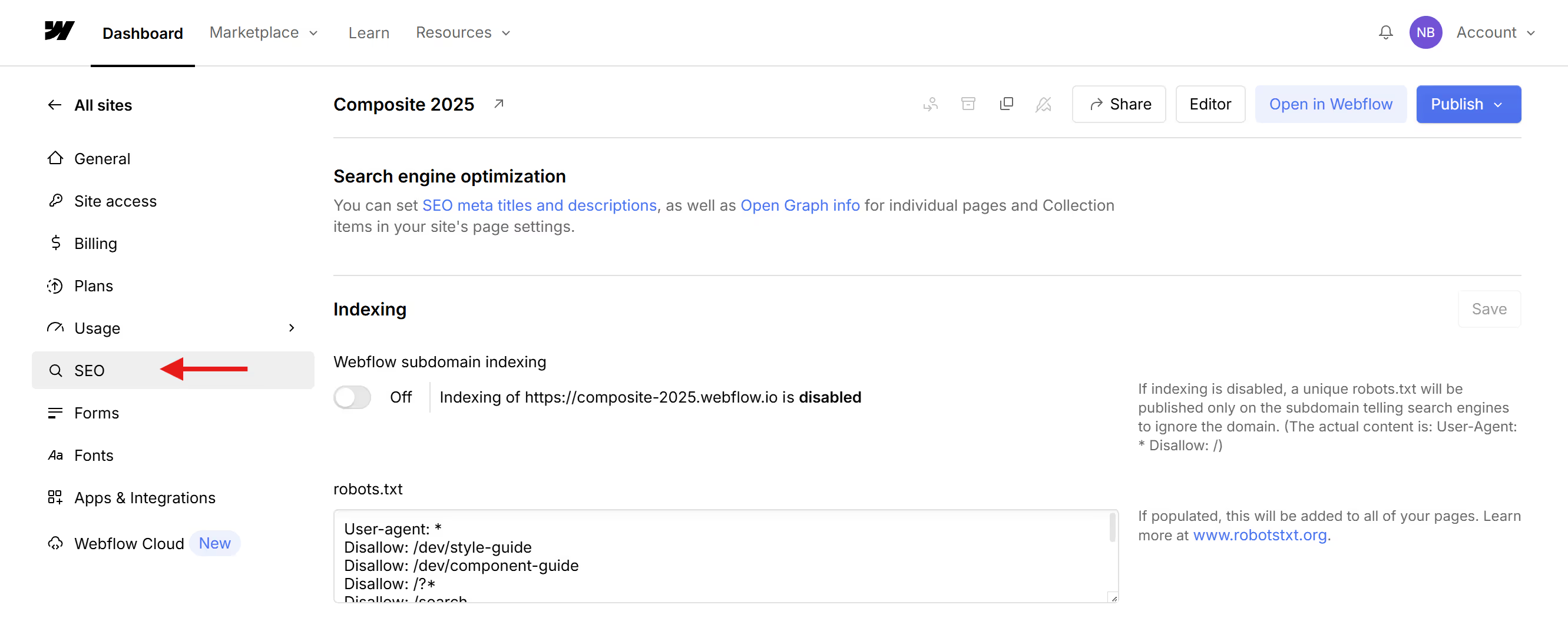

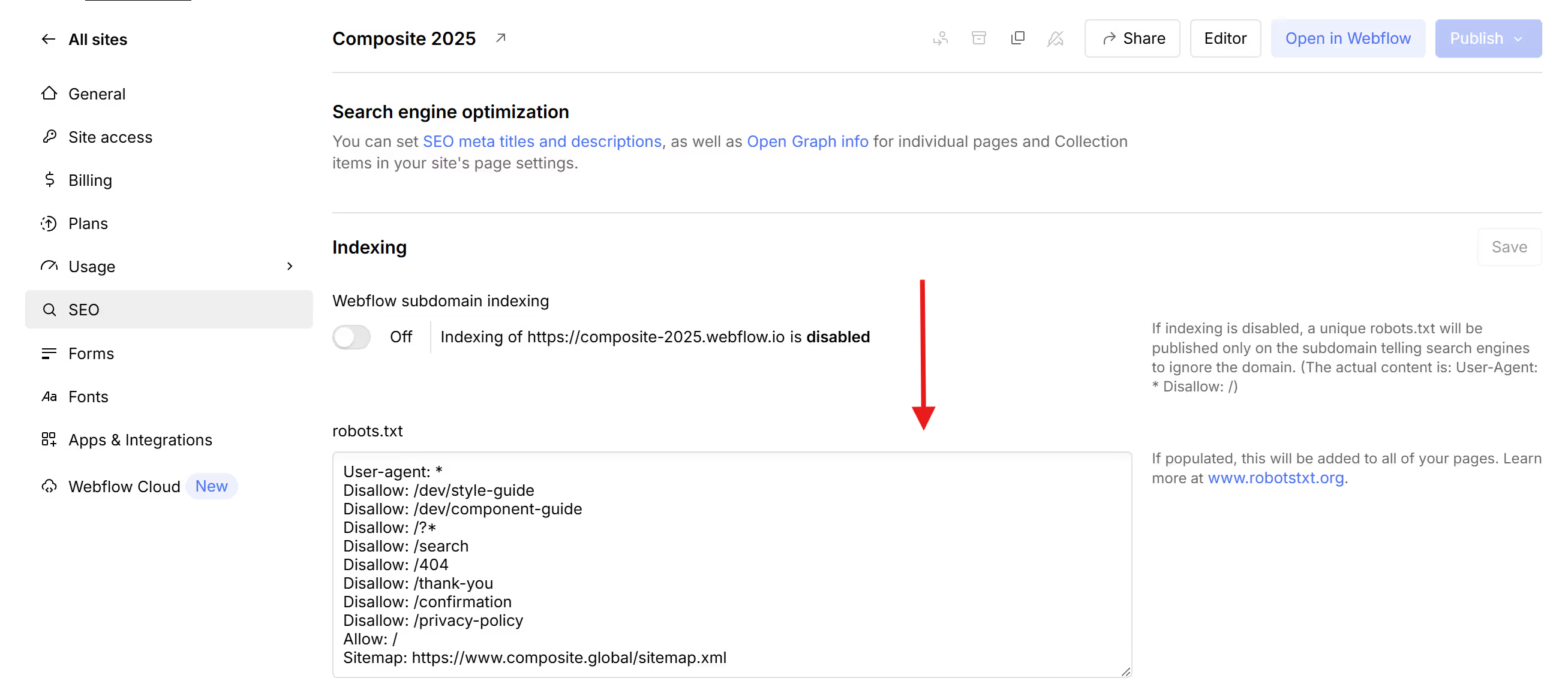

How to edit your robots.txt file in Webflow:

robots.txt files can be edited in Webflow on the same page you manage your llms.txt.

- Go to your Webflow dashboard

- Click the settings icon on the site you want to edit

- Click the SEO tab

- Edit the robots.txt section directly

- Finally, publish your site!

Use tools like Google Search Console’s robots.txt Tester to monitor how your site is accessed by search engines.

Pro tip: Coordinate your robots.txt and llms.txt files to avoid conflicting instructions for crawlers vs. AI scrapers.

Final Thoughts

The robots.txt file is a quiet workhorse of web strategy. While it won’t boost your SEO directly, it plays a crucial role in managing crawl behavior, preserving resources, and keeping sensitive or irrelevant pages out of search.

Already using robots.txt wisely? You might be ready to explore llms.txt to help manage how AI models like ChatGPT interact with your site.