First we had generative AI—tools like ChatGPT that respond when prompted. Now we’re entering the age of agentic AI: systems that not only respond but also act, plan, and adapt on their own.

This shift from assistant to agent is more than a technical upgrade. It changes how we design user experiences, structure content, and build workflows. And it’s happening fast.

What Is Agentic AI?

Unlike traditional generative AI, which produces output only in response to a specific prompt, agentic AI systems operate with a degree of autonomy. They can:

- Set goals

- Make decisions based on feedback

- Perform multi-step tasks over time

- Adapt to changing conditions without ongoing user input

Think of it this way: a generative tool writes your blog draft after being prompted to do so. An agentic tool writes, edits, formats, posts, and promotes it—autonomously, on repeat.

According to TechRadar, agentic AI will “redefine the future of work by taking on complex, multi-layered responsibilities that traditionally required human coordination.” This includes customer support, marketing campaigns, UX optimization, and more.

Wait—Is Agentic AI the Same as an LLM?

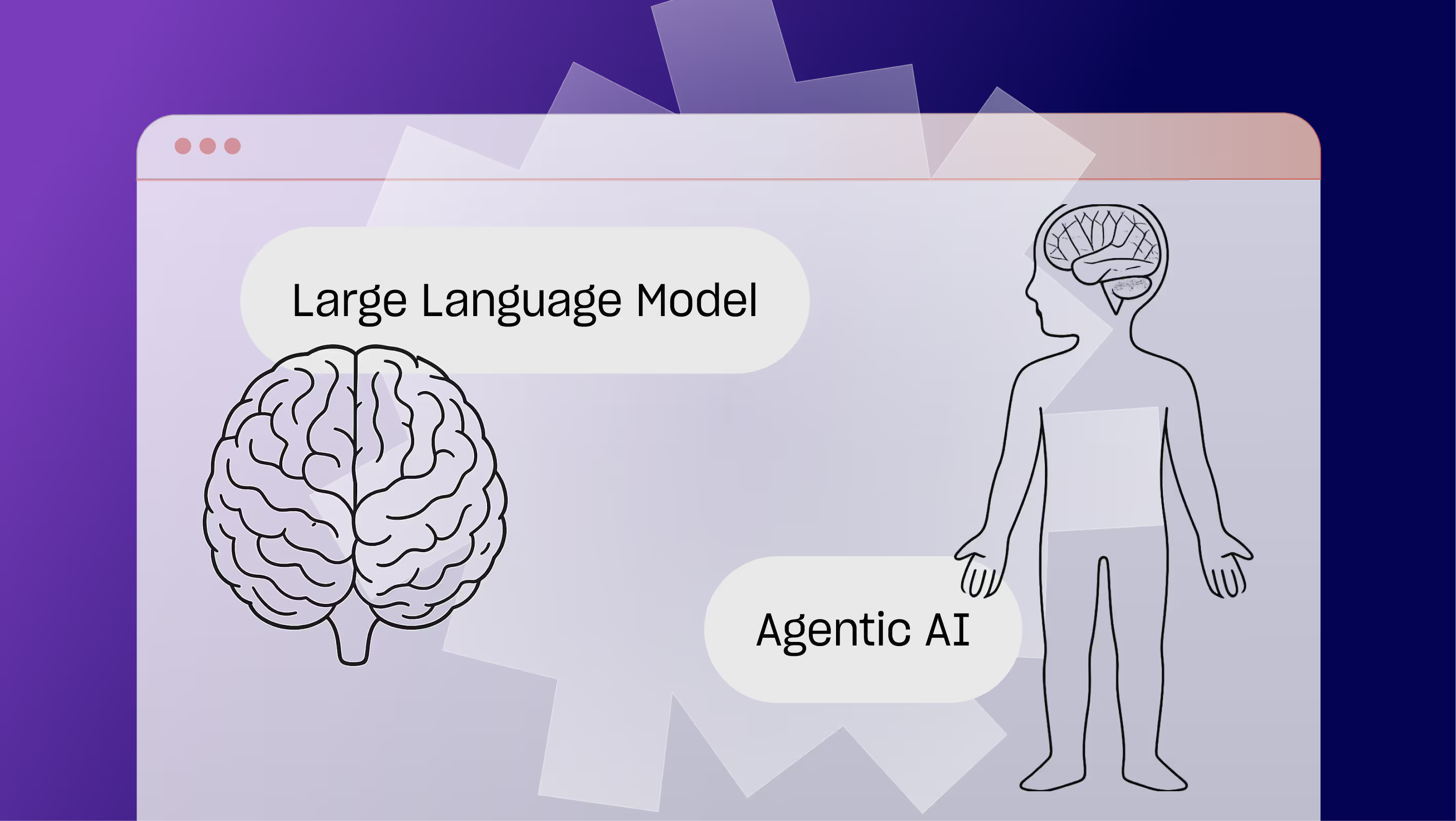

Not quite. While they’re closely connected, agentic AI and large language models (LLMs) are not the same thing.

LLMs (like ChatGPT, Claude, and Gemini) are trained to generate and understand language.

They’re great at answering questions, summarizing content, or writing copy, but they’re fundamentally reactive. They wait for a prompt before responding.

Agentic AI, on the other hand, refers to AI systems that can act autonomously.

In practice, an agentic system sets goals, makes decisions based on context and feedback, executes multi-step tasks, adapts in real time, and can keep working over extended periods without a fresh prompt at each step.

Agentic AI systems often use LLMs as one component, but they also include memory, task planning, action-taking logic, and often external tools (APIs, web browsing, scheduling, etc.).

Think of it this way:

LLM = The brain

Agentic AI = The full system with brain, body, and autonomy

If an LLM helps a user draft an email, an agentic AI could draft, personalize, schedule, and send it—then follow up based on replies.
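To make the brain-versus-full-system distinction concrete, here is a minimal sketch of an agent loop in Python. Everything in it is hypothetical: `Agent`, `scripted_planner`, and the three tools are illustrative names, and the planner is a hard-coded stand-in for what would normally be an LLM call. The point is only to show the moving parts named above (a goal, a planner, tools, and memory) working together without a new prompt at each step.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class Agent:
    goal: str
    # Stand-in for the LLM: given the goal and memory, pick the next action
    # (or None when the goal is considered done).
    plan: Callable[[str, List[str]], Optional[str]]
    # External tools the agent is allowed to call, keyed by action name.
    tools: Dict[str, Callable[[], str]]
    memory: List[str] = field(default_factory=list)
    max_steps: int = 10  # hard stop so the loop cannot run forever

    def run(self) -> List[str]:
        for _ in range(self.max_steps):
            action = self.plan(self.goal, self.memory)
            if action is None:
                break
            result = self.tools[action]()
            # Remember what happened so the next planning step can use it.
            self.memory.append(f"{action}: {result}")
        return self.memory


def scripted_planner(goal: str, memory: List[str]) -> Optional[str]:
    # Hypothetical planner: walks a fixed script instead of calling a model.
    steps = ["draft", "schedule", "send"]
    return steps[len(memory)] if len(memory) < len(steps) else None


tools = {
    "draft": lambda: "email drafted",
    "schedule": lambda: "send time chosen",
    "send": lambda: "email sent",
}

agent = Agent(goal="email the customer", plan=scripted_planner, tools=tools)
history = agent.run()
```

A real system would replace `scripted_planner` with a model call and the lambdas with real integrations, but the loop itself (plan, act, record, repeat) stays much the same.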

UX Meets AX: Designing for Humans and Agents

Agentic AI changes the way people interact with digital products, and that has big implications for UX. Instead of relying on clicks, scrolls, and filters, users can now state a goal and let AI take action on their behalf. The result is a shift from interface-based design to intent-based design.

Let’s say someone wants to grow their email list. Rather than navigating a multi-step onboarding flow, an agent might configure the right tools, integrations, and CTAs automatically. Interfaces still matter—but they need to:

- Surface just enough context

- React fluidly to user input and feedback

- Clearly explain what AI is doing behind the scenes

As AI takes on more initiative, trust and transparency become essential. Users need to understand what the AI is allowed to do, how it makes decisions, and where they can intervene. UX patterns like confirmations, progress indicators, and fallback options aren’t just nice to have—they’re foundational.

But AI isn’t just in the interface; it’s also using it. Some AI agents are already navigating sites, pulling data from CMSs, and interacting with support tools. That means your site must be legible not only to people, but to machines working on their behalf.

This is where Agent Experience (AX) comes in.

AX is the emerging counterpart to UX. If UX is how people experience your product, AX is how AI agents interpret, navigate, and act on your content. Designing for AX means making your site machine-readable and action-ready. Think semantic HTML, clear metadata, structured content, and proper schema markup.

It’s a bit like accessibility: screen readers need headings, labels, and ARIA roles to interpret your site accurately. AI agents need similar scaffolding to parse your content and act on it. In fact, many accessibility practices already double as AX best practices.
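As one illustration of what "proper schema markup" can look like, the sketch below builds a schema.org `Article` description as JSON-LD and wraps it in a script tag for the page head. The function name `article_jsonld` and the sample values are assumptions made for this example; the property names (`headline`, `author`, `datePublished`, `description`) come from the schema.org vocabulary.

```python
import json


def article_jsonld(headline: str, author: str, date_published: str, description: str) -> str:
    """Return a JSON-LD <script> tag describing a page as a schema.org Article."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "datePublished": date_published,  # ISO 8601 date
        "description": description,
    }
    # Escape "</" so a value can never close the script tag early.
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return '<script type="application/ld+json">\n' + payload + "\n</script>"


# Placeholder values for illustration only.
snippet = article_jsonld(
    headline="What Is Agentic AI?",
    author="Jane Doe",
    date_published="2025-01-15",
    description="An introduction to agentic AI and agent experience (AX).",
)
```

Markup like this does not change what human visitors see, but it gives an agent an unambiguous statement of what the page is, who wrote it, and when.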

As tools like ChatGPT, Google’s SGE, Amazon’s Rufus, and custom GPTs become more embedded in user workflows, AX will increasingly shape how your brand is discovered, interpreted, and used. By designing for both UX and AX, you’re not just meeting the needs of users today—you’re future-proofing your digital presence for the systems guiding them tomorrow.

Agentic AI in Action

According to Vogue Business, brands are already experimenting with agentic AI for tasks like:

- Virtual styling and customer service in fashion retail

- Real-time campaign iteration in marketing

- Automated personalization based on observed behavior

Adobe has launched a new line of AI “agents” to handle campaign setup, asset creation, and even A/B testing across platforms like Microsoft Copilot.

These aren’t just AI assistants—they’re autonomous actors embedded into the user journey.

Google’s new AI Mode in Shopping uses Gemini to deliver a more agentic experience—building outfits, adapting to feedback, and refining suggestions in real time. Instead of just reacting to single queries, it works toward user-defined goals like “find a travel outfit,” blending virtual try-on, conversational filtering, and continuous task execution—hallmarks of agentic AI.

UX Anti-Patterns to Avoid in the Age of Agentic AI

As powerful as agentic AI is, it also introduces a new layer of risk in how we design user experiences. When systems start making decisions on behalf of users, clarity, feedback, and control become more important than ever.

But not all design choices scale well in this new AI-driven context.

In UX, an anti-pattern is a design choice that seems helpful on the surface but ends up causing confusion, frustration, or unintended consequences. And agentic systems, because they act without constant user input, make it easier to accidentally fall into these traps.

Here are a few emerging anti-patterns to watch out for:

1. Invisible AI

The AI performs actions without informing the user—like auto-saving drafts, sending messages, or triggering events silently. This erodes trust and leaves users feeling out of control.

Solution: Always surface what the system is doing behind the scenes. Consider progress indicators, passive notifications, or “AI activity logs.”

2. Over-Persuasion

The AI nudges users toward a specific path without giving them enough context to judge it. Think of an onboarding flow that assumes your goal or, worse, completes it for you.

Solution: Use adaptive suggestions, not assumptions. Let users affirm or redirect the AI’s logic before major actions.

3. Interruptive Validation

The AI asks the user to confirm every minor decision it makes, defeating the purpose of automation and adding friction to simple flows.

Solution: Reserve confirmation prompts for high-risk or irreversible actions. For everything else, show what happened and allow undo.

4. Unclear Authority

Users don’t know who (or what) is responsible for a given decision—the app, the AI, or an integration. This creates confusion when things go wrong.

Solution: Make system roles transparent. Is the AI suggesting or executing? Is this change final or editable?
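Several of these solutions can live in one small piece of logic. The sketch below is a hypothetical policy, not a prescribed implementation: `AgentAction`, `ActionRunner`, and the log wording are names invented for this example. It asks for confirmation only when an action is high-risk or has no undo, runs everything else immediately, and writes each outcome to an activity log that states whether the AI proposed or executed the action.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class AgentAction:
    description: str
    execute: Callable[[], None]
    undo: Optional[Callable[[], None]] = None  # None means the action is irreversible
    high_risk: bool = False


@dataclass
class ActionRunner:
    # Callback that asks the user; returns True if they approve.
    confirm: Callable[[AgentAction], bool]
    activity_log: List[str] = field(default_factory=list)

    def run(self, action: AgentAction) -> bool:
        # Interrupt the user only for high-risk or irreversible actions.
        needs_confirmation = action.high_risk or action.undo is None
        if needs_confirmation and not self.confirm(action):
            self.activity_log.append(f"AI proposed, user declined: {action.description}")
            return False
        action.execute()
        status = (
            "AI executed after user confirmation"
            if needs_confirmation
            else "AI executed (undo available)"
        )
        # Every outcome is logged, so nothing happens invisibly.
        self.activity_log.append(f"{status}: {action.description}")
        return True


# Usage with a simulated user who declines every confirmation prompt.
drafts: List[str] = []
sent: List[str] = []
runner = ActionRunner(confirm=lambda action: False)
runner.run(AgentAction("save draft", lambda: drafts.append("v1"), undo=lambda: drafts.pop()))
runner.run(AgentAction("send email to list", lambda: sent.append("campaign"), high_risk=True))
```

In this run the draft is saved without a prompt because it can be undone, while the email send is blocked because the user declined, and both events appear in `runner.activity_log`.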

Just as we design for accessibility, privacy, and clarity today, we’ll need a new layer of UX standards for agentic systems. Treat the AI as a co-pilot, not a ghost in the machine, and users will follow your lead.

What This Means for Teams

Agentic AI doesn’t eliminate design, strategy, or content. It demands a new kind of collaboration—between humans and tools that can think (and act) for themselves.

This creates several opportunities, including:

- Streamlining UX flows for goal-driven automation

- Building in checkpoints where users can supervise or adjust AI decisions

- Designing adaptive UI layers that update in real time

- Treating AI not just as a backend layer, but as a co-pilot in the interface

At Composite, we believe the best user experiences are the ones that anticipate needs without sacrificing clarity. Agentic AI raises the bar for what intuitive, goal-driven UX should deliver—both for users and the agents guiding them.

Want to future-proof your UX for the age of AI? Let’s talk.